Hard skills are technical abilities such as curriculum development, data analysis, and educational leadership that ensure effective management and improvement of academic programs.

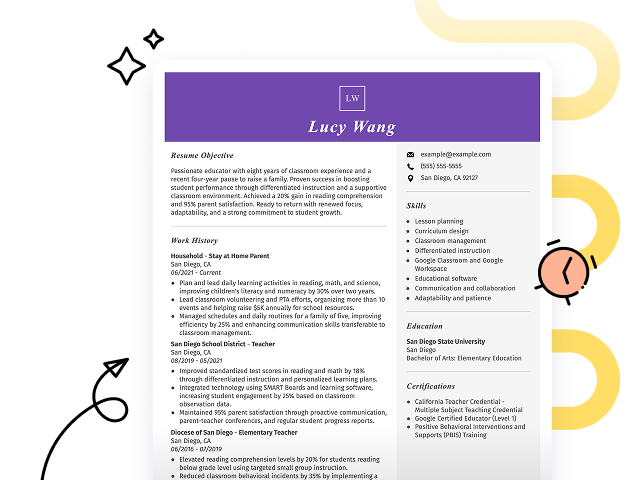

Popular Principal Resume Examples

Check out our top principal resume examples that showcase important skills like leadership, curriculum development, and communication. These examples will help you highlight your achievements effectively to attract potential employers.

Ready to design your standout resume? Our Resume Builder offers user-friendly templates specifically crafted for education professionals, making the process seamless and efficient.


Entry-level principal resume

This entry-level resume highlights the job seeker's leadership skills and significant contributions to student enrollment and faculty performance. New professionals in this field must clearly demonstrate their ability to drive educational outcomes and implement effective programs, showcasing relevant achievements despite limited experience.

Mid-career principal resume

This resume effectively outlines key qualifications, showcasing a trajectory of increased leadership responsibilities. The job seeker's measurable achievements and advanced education demonstrate readiness for principal roles, emphasizing their capability to drive school improvement and foster a positive learning environment.

Experienced principal resume

This section demonstrates the applicant's strong leadership and impact in education, with key achievements such as increasing student enrollment by 15% and improving graduation rates by 12%. The bullet-point format improves readability, making it easy for hiring managers to quickly assess qualifications.

Resume Template—Easy to Copy & Paste

Yuki Martinez

Austin, TX 78705

(555) 555-5555

Yuki.Martinez@example.com

Professional Summary

Dynamic Principal skilled in leadership, curriculum innovation, and boosting school performance. Experienced in managing budgets and strengthening school culture to create effective learning environments.

Work History

Principal

Innovative Learning Academy - Austin, TX

March 2023 - Current

- Implemented new curriculum, boosting scores by 15%

- Managed school budget, optimizing resource allocation

- Led team of 50 staff, enhancing school culture

Assistant Principal

Greenfield High School - Austin, TX

March 2020 - February 2023

- Developed staff training, improving retention by 20%

- Coordinated safety protocols, reducing incidents by 30%

- Increased parent engagement, growing attendance by 25%

Educational Coordinator

Sunrise Charter School - Pinehill, TX

April 2018 - February 2020

- Designed student programs, enhancing key skills by 40%

- Facilitated teacher workshops, raising collaboration levels

- Improved campus facilities, achieving higher satisfaction

Skills

- Educational Leadership

- Curriculum Development

- Budget Management

- Staff Mentoring

- Teacher Training

- Student Engagement

- Resource Allocation

- Safety Protocols

Certifications

- Certified Educational Administrator - National Association of School Administrators

- Advanced Principal Certification - Educational Leadership Institute

Education

Master of Education, Educational Leadership

University of Illinois, Champaign, Illinois

May 2018

Bachelor of Arts, Education

Illinois State University, Normal, Illinois

May 2016

Languages

- Spanish - Beginner (A1)

- French - Beginner (A1)

- German - Intermediate (B1)

How to Write a Principal Resume Summary

Your resume summary is the first thing employers see, so it should make a lasting impression and highlight your qualifications for the principal role. As an educational leader, emphasize your experience guiding teams, improving student performance, and fostering a positive school culture. To show what makes a compelling summary, here are examples that illustrate effective strategies and common pitfalls:

Weak example

I am an experienced principal with a solid background in education and leadership. I hope to find a position where I can use my skills to contribute positively to the school. A supportive environment that encourages professional development is what I seek. I believe I would make a great addition to the team if given the chance.

- The summary is vague and lacks specific achievements or qualifications related to the role of principal

- It emphasizes personal desires over the value the job seeker brings, which weakens its appeal to potential employers

- The language used is generic and does not convey enthusiasm or unique strengths relevant to educational leadership

Strong example

Results-driven principal with over 10 years of experience in educational leadership, focused on improving student achievement and fostering a positive school culture. Successfully increased standardized test scores by 20% through the implementation of targeted instructional strategies and professional development for teachers. Proficient in data analysis, curriculum design, and engaging stakeholders to improve learning outcomes.

- Begins with specific years of experience and leadership focus

- Highlights quantifiable achievements that demonstrate significant impact on student performance

- Includes relevant skills that are essential for an effective principal role


Showcasing Your Work Experience

The work experience section is the core of a principal's resume, since it is where you showcase most of your qualifications. Good resume templates give this section prominence so your leadership roles and accomplishments stand out.

This section should be structured in reverse-chronological order, detailing your previous positions in education. Be sure to include bullet points that describe specific achievements and initiatives you've led in each role.

Now, let's look at a couple of examples that illustrate effective work history entries for principals. These examples will help you understand what resonates with hiring managers and what pitfalls to avoid:

Weak example

Principal

Lincoln High School – San Francisco, CA

- Managed school operations

- Oversaw staff and student attendance

- Implemented educational programs

- Organized school events and activities

- Lacks specific details about leadership achievements or initiatives

- Bullet points do not highlight measurable impacts on student performance or engagement

- Description focuses on routine duties rather than innovative contributions or successes

Strong example

Principal

Greenwood High School – Seattle, WA

August 2020 - Current

- Led the implementation of a new curriculum that increased student engagement by 30%, fostering a more interactive learning environment

- Established partnerships with local organizations to improve extracurricular programs, resulting in a 40% increase in student participation

- Implemented data-driven strategies to improve overall school performance, achieving a 15% rise in standardized test scores over two years

- Starts each bullet with compelling action verbs that clearly convey achievements

- Incorporates specific metrics and percentages to highlight tangible results from initiatives

- Demonstrates leadership and strategic thinking relevant to educational administration

While the resume summary and work experience are important parts of your application, don’t overlook the importance of other sections. Each element contributes to presenting a well-rounded picture of your qualifications. For thorough guidance, be sure to consult our detailed guide on how to write a resume.

Top Skills to Include on Your Resume

A skills section is important for any effective resume, as it quickly highlights your qualifications to potential employers. This allows you to make a strong first impression and demonstrate that you have what it takes for the role.

For a principal position, focus on hard skills that prove your expertise in the education field and soft skills that convey your professionalism and leadership abilities.

Soft skills, including communication, adaptability, and conflict resolution, foster collaboration among staff and create a positive learning environment for students.

Selecting the right skills helps you align with what employers expect. Many organizations use applicant tracking systems (ATS) to filter out applicants who lack the skills essential for the position.

To highlight your strengths effectively, review job postings carefully to see which skills are in demand. Tailoring your application this way helps it get past ATS screening and catch recruiters' attention.


10 skills that appear on successful principal resumes

Highlighting key skills on your resume can improve your chances of catching recruiters' attention for principal positions. Reviewing resume examples shows how these skills are commonly presented, helping you feature them confidently in your own applications.

Consider incorporating any skills from this curated list that match your background and the job requirements:

Leadership

Strategic planning

Effective communication

Problem-solving

Financial acumen

Team development

Change management

Analytical thinking

Project management

Conflict resolution

Based on an analysis of 5,000+ administrative professional resumes from 2023-2024

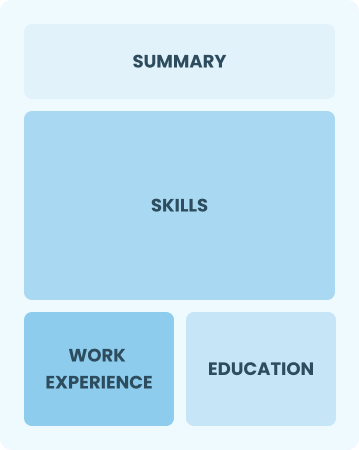

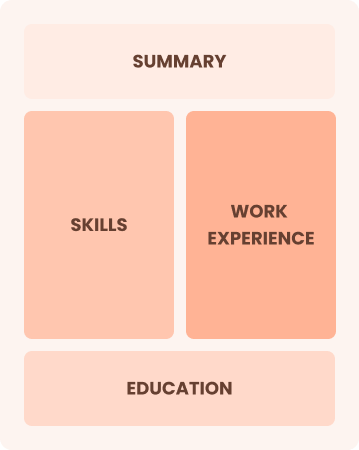

Resume Format Examples

Choosing the right resume format matters because it presents your key skills, relevant experience, and career growth in a way that resonates with hiring managers.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for:

Experienced educators highlighting leadership skills and potential for further growth

Chronological

Emphasizes work history in reverse order

Best for:

Experienced leaders excelling in the education sector

Frequently Asked Questions

Should I include a cover letter with my principal resume?

Yes. A cover letter can strengthen your application by showcasing your passion and qualifications. It gives you the chance to elaborate on your experience and explain how it aligns with the job. For tips on crafting an effective cover letter, explore our comprehensive guide on how to write a cover letter. Alternatively, you can use our Cover Letter Generator for a quick start.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs outside the U.S., you will often need a CV instead of a resume. A CV provides a comprehensive overview of your academic and professional history, and employers in many countries prefer it. To better understand formatting and style, review these CV examples. You can also explore tips on how to write a CV that aligns with international standards.

What soft skills are important for principals?

Soft skills like communication, empathy, and leadership are essential for principals. These interpersonal skills foster strong relationships with students, staff, and parents, creating a positive school environment that encourages collaboration and supports student success.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills like communication, project management, and teamwork when applying for principal positions. These abilities illustrate your potential to lead effectively, even if your earlier roles were in different fields. Use concrete examples from past experiences to demonstrate how you've successfully navigated challenges similar to those faced in educational leadership.

Should I include a personal mission statement on my principal resume?

Yes, a personal mission statement can strengthen your resume by conveying your core values and career aspirations. It works best for organizations that emphasize alignment with their mission and culture, such as non-profits or companies focused on social impact.

How do I add my resume to LinkedIn?

To add your resume to LinkedIn and improve its visibility, upload it to your profile or highlight key achievements in the "About" and "Experience" sections. This makes it easier for recruiters and hiring managers to find you, increasing your chances of connecting with opportunities.