Hard skills include technical abilities such as machine operation, quality control inspection, and assembly techniques that ensure efficient production processes.

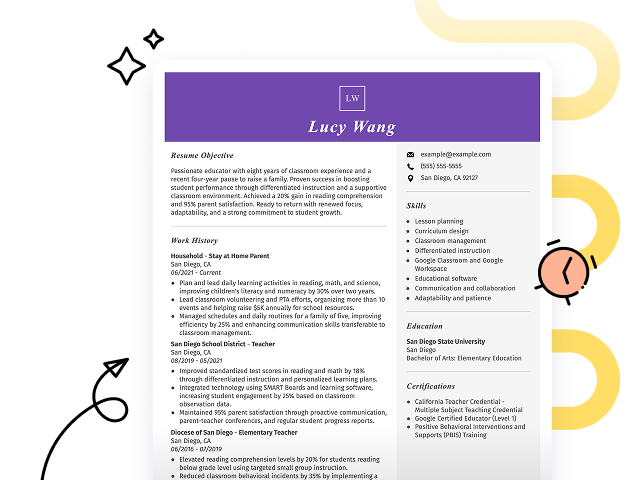

Popular Assembly Line Worker Resume Examples

Check out our top assembly line worker resume examples that showcase skills such as efficiency, attention to detail, and teamwork. These examples demonstrate how to present your achievements effectively in the manufacturing industry.

Looking to improve your resume? Our Resume Builder offers user-friendly templates specifically designed for assembly line professionals to help you stand out.


Entry-level assembly line worker resume

This entry-level resume for an assembly line worker effectively highlights the job seeker's hands-on experience in optimizing production processes and maintaining high-quality standards. New professionals in this field must demonstrate their ability to collaborate within teams, adhere to safety protocols, and improve operational efficiency even with limited work history.

Mid-career assembly line worker resume

This resume effectively showcases the applicant's achievements and expertise in manufacturing, highlighting their ability to improve efficiency and safety. The structured presentation of qualifications illustrates a solid career trajectory, indicating readiness for leadership roles and more complex responsibilities in assembly line operations.

Experienced assembly line worker resume

This resume illustrates the applicant's extensive experience as an assembly line worker, highlighting their ability to assemble over 500 units daily with 99% accuracy. The clear formatting of achievements, such as reducing product errors by 15%, effectively communicates their productivity to potential employers.

Resume Template—Easy to Copy & Paste

Sophia Miller

Tacoma, WA 98407

(555)555-5555

Sophia.Miller@example.com

Professional Summary

Dynamic Assembly Line Worker with 7 years' expertise in boosting production efficiency, ensuring quality control, and optimizing processes. Proven track record in enhancing operations using effective safety protocols and industry practices.

Work History

Assembly Line Worker

Precision Components Manufacturing - Tacoma, WA

November 2023 - November 2025

- Enhanced efficiency by 15% through process improvements

- Reduced waste by 10% in assembly tasks

- Assisted in training 12 new employees

Production Technician

Innovative Production Solutions - Tacoma, WA

May 2020 - October 2023

- Operated machinery with 98% accuracy rate

- Improved product quality by 20% through inspections

- Collaborated on reducing downtime by 30 minutes

Machine Operator

Efficient Manufacturing Corp - Eastside, WA

May 2018 - April 2020

- Handled equipment with zero incidents

- Boosted production output by 25%

- Conducted safety checks weekly

Languages

- Spanish - Beginner (A1)

- French - Intermediate (B1)

- German - Beginner (A1)

Skills

- Assembly line processes

- Machinery operation

- Quality control

- Process optimization

- Safety protocols

- Team collaboration

- Problem-solving

- Time management

Certifications

- Certified Production Technician - Manufacturing Skill Standards Council

- Safety Compliance Training - National Safety Council

Education

Master's Degree Industrial Engineering

Illinois State University - Normal, Illinois

December 2017

Bachelor's Degree Mechanical Engineering

University of Illinois Urbana-Champaign, Illinois

December 2014

How to Write an Assembly Line Worker Resume Summary

Your resume summary is the first thing employers notice, making it important to craft a strong and engaging introduction. For an assembly line worker, this section should emphasize your precision, efficiency, and teamwork skills.

Highlighting your experience with machinery and adherence to safety protocols will set you apart in this role. Focus on showcasing your ability to meet production goals while maintaining quality standards.

To guide you in creating a powerful summary, let's look at examples that illustrate effective strategies and common pitfalls:

I am a dedicated assembly line worker with years of experience in manufacturing. I am seeking a position where I can use my skills and help the company succeed. A job with good hours and opportunities for advancement is what I am looking for. I believe I can be a valuable member of your team if given the chance.

- Contains vague phrases about experience without specific achievements or skills mentioned

- Relies heavily on personal desires rather than showcasing how the job seeker will benefit the employer

- Uses generic terms like "dedicated" and "valuable" that do not illustrate unique qualities or contributions

Detail-oriented assembly line worker with over 7 years of experience in a fast-paced manufacturing environment, specializing in quality control and efficiency optimization. Improved production line speed by 20% through the implementation of lean manufacturing techniques, leading to a reduction in waste and increased productivity. Proficient in operating heavy machinery, conducting safety inspections, and collaborating with cross-functional teams to meet production targets.

- Begins with specific experience level and area of expertise relevant to assembly line work

- Highlights quantifiable achievements that showcase measurable impact on production efficiency

- Includes relevant technical skills that demonstrate capability in a manufacturing setting


Showcasing Your Work Experience

The work experience section is the core of your resume as an assembly line worker, since it is where you present most of your skills and achievements. Good resume templates give this section prominence so your employment history is easy to scan.

This area should be structured in reverse-chronological order, detailing each past position clearly. Use bullet points to highlight specific accomplishments and tasks you performed in various roles to showcase your efficiency and contributions.

Next, we will provide examples that illustrate successful work history entries for assembly line workers. These examples will help clarify what elements make a strong impression and what pitfalls to avoid:

Assembly Line Worker

Global Manufacturing Corp – Detroit, MI

- Assembled parts on the line.

- Followed safety protocols.

- Worked with a team to meet quotas.

- Kept work area clean and organized.

- Lacks employment dates which are essential for context

- Bullet points do not highlight any specific achievements or skills

- Describes routine tasks instead of showcasing impact or measurable outcomes

Assembly Line Worker

Automotive Solutions Inc. – Detroit, MI

March 2020 - Current

- Efficiently operate machinery to assemble automotive parts, achieving a 15% increase in production speed over the last year.

- Maintain strict adherence to safety protocols, contributing to a zero-accident record for over six months.

- Collaborate with team members to streamline processes, resulting in a reduction of assembly errors by 20%.

- Starts each bullet with effective action verbs that highlight key achievements

- Incorporates specific metrics demonstrating the applicant's contributions and effectiveness

- Showcases relevant skills such as teamwork and safety awareness that are important for the role

While your resume summary and work experience take center stage, don't overlook the importance of other sections. Each part plays a critical role in showcasing your qualifications. For more detailed guidance on crafting a standout resume, be sure to explore our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A skills section lets employers see your qualifications at a glance and shows that you can meet the specific demands of the assembly line worker role.

For this position, emphasize both technical skills and soft skills. Mention tools like conveyor belts, assembly machinery, and quality control software that demonstrate your capability in handling essential tasks on the line. The most critical soft skills are unwavering attention to detail to ensure product quality, disciplined focus to maintain the speed of the line, and collaborative teamwork to communicate issues quickly and maintain efficient flow with colleagues.

Soft skills encompass interpersonal attributes like teamwork, attention to detail, and adaptability, which foster a cooperative work environment and improve overall productivity on the assembly line.

When selecting your resume skills, it's important to align them with what employers expect from a job seeker. Many organizations rely on automated systems to filter out applicants lacking essential skills for the job.

To improve your chances of getting noticed, take the time to review job postings carefully. They often highlight key skills that recruiters prioritize, ensuring your resume stands out and meets ATS requirements.


10 skills that appear on successful assembly line worker resumes

Highlighting essential skills on your resume can grab the attention of hiring managers looking for assembly line workers. Our resume examples showcase these high-demand skills, enabling you to apply with confidence and stand out from the competition.

Consider adding these 10 relevant skills to your resume if they align with your experience and job requirements:

Attention to detail

Team collaboration

Mechanical aptitude

Time management

Safety awareness

Problem-solving abilities

Ability to follow instructions

Quality control techniques

Adaptability

Basic math skills

Based on analysis of 5,000+ production professional resumes from 2023-2024

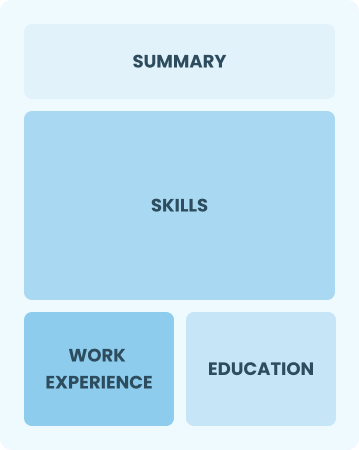

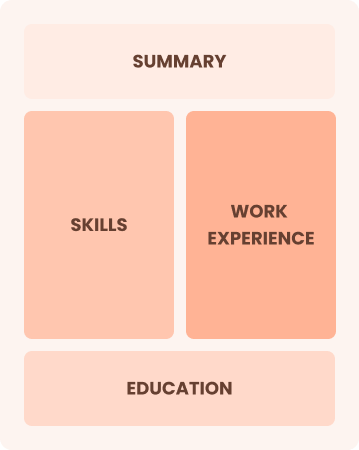

Resume Format Examples

Choosing the right resume format is important for assembly line workers because it highlights your technical skills, relevant experience, and career growth in a clear way.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates or career changers with limited hands-on experience

Combination

Balances skills and work history equally

Best for:

Mid-career professionals eager to demonstrate their skills and potential for growth

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned assembly line workers excelling in efficiency and team leadership

Frequently Asked Questions

Should I include a cover letter with my assembly line worker resume?

Absolutely. Including a cover letter is a great way to highlight your skills and showcase your enthusiasm for the assembly line worker position. It allows you to personalize your application and connect with recruiters on a deeper level. If you need assistance, our guide on how to write a cover letter can be very helpful, or try our Cover Letter Generator for quick results.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs abroad, use a CV instead of a resume to align with local customs. A CV typically provides a comprehensive overview of your academic and professional history. Check out CV examples to see how others have structured their documents, and visit our guide on how to write a CV for tips on formatting that meets international expectations.

What soft skills are important for assembly line workers?

Soft skills like communication, teamwork, and problem-solving are essential for assembly line workers. These interpersonal skills foster collaboration among team members, improve efficiency, and help resolve issues quickly, ultimately contributing to a smoother production process and a positive work environment.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills such as teamwork, attention to detail, and efficiency from previous roles. These abilities demonstrate your capability to thrive in an assembly line environment, even if you lack direct experience. Use concrete examples from past jobs to illustrate how you've successfully met deadlines and maintained quality standards, showcasing your readiness to excel in this position.

How do I write a resume with no experience?

If you're applying for an assembly line worker position but lack formal experience, consider using a no-experience resume format to highlight any hands-on skills you've gained through school projects, internships, or volunteer work. Focus on your attention to detail, ability to follow instructions, and teamwork skills. Employers value reliability and a strong work ethic, so showcase your eagerness to learn and contribute.

How do I add my resume to LinkedIn?

To improve your resume's visibility on LinkedIn, upload it directly to your profile or highlight key skills and experiences in the "About" and "Experience" sections. This approach helps recruiters find assembly line workers with relevant qualifications more easily, increasing your chances of landing job opportunities.