When a software engineer is working to master technical proficiencies, they focus on hard skills such as programming languages, software development methodologies, and system architecture.

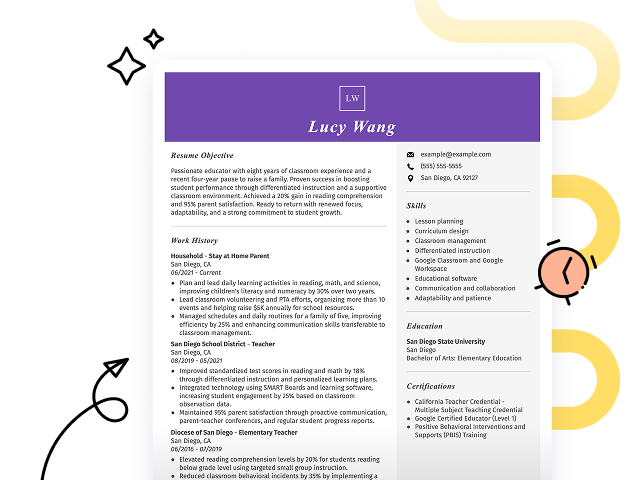

Popular Software Engineer Resume Examples

Check out our top software engineer resume examples that showcase important skills such as programming languages, problem-solving abilities, and project management experience. These examples can help you effectively highlight your accomplishments to potential employers.

Ready to build your standout resume? Our Resume Builder offers user-friendly templates specifically designed for tech professionals, making the process seamless and efficient.

Entry-level software engineer resume

This entry-level resume for a software engineer highlights the applicant's strong technical skills and significant achievements from previous roles, including notable contributions to app performance and team collaboration. New professionals in this field must demonstrate their adaptability and problem-solving abilities through relevant projects and certifications that showcase their readiness for real-world challenges.

Mid-career software engineer resume

This resume effectively showcases qualifications through quantifiable achievements and leadership roles, demonstrating the applicant's progressive career trajectory. The structured presentation of skills and accomplishments indicates readiness for complex challenges and greater responsibilities in software engineering.

Experienced software engineer resume

This work experience section demonstrates the applicant's extensive background as a software engineer, highlighting achievements such as reducing project delivery lead time by 30% and increasing user engagement by 40%. The bullet-point format improves readability, making it easy for hiring managers to grasp key accomplishments quickly.

Resume Template—Easy to Copy & Paste

Li Smith

Brookfield, WI 53008

(555)555-5555

Li.Smith@example.com

Skills

- Python programming

- Java development

- SQL databases

- API design

- Agile methodologies

- Cloud services

- Machine learning

- Code optimization

Languages

- Spanish - Beginner (A1)

- French - Beginner (A1)

- German - Beginner (A1)

Professional Summary

Software Engineer with 8 years' experience in enhancing application performance and boosting team efficiency. Expert in Python and Java, leveraging AI and machine learning for innovative solutions.

Work History

Software Engineer

Innovatech Solutions - Brookfield, WI

June 2023 - October 2025

- Enhanced app performance by 30%

- Led a team of 5 developers

- Streamlined code base with 40% less duplication

Backend Developer

TechWave Dynamics - Brookfield, WI

January 2020 - May 2023

- Improved system reliability by 25%

- Automated testing, reducing bugs by 50%

- Developed APIs used by 10K users

Junior Programmer

CodeCrafters Inc. - Milwaukee, WI

January 2017 - December 2019

- Reduced load time of apps by 20%

- Supported deployment of 12 projects

- Optimized database queries by 35%

Certifications

- Certified Software Developer - National Software Association

- Advanced Python Programmer - Tech Institute

Education

Master's Degree, Computer Science

Stanford University - Stanford, CA

June 2017

Bachelor's Degree, Software Engineering

University of California - Berkeley, CA

June 2015

How to Write a Software Engineer Resume Summary

Your resume summary is important as it’s the first thing employers will see. It sets the tone for your application and can make a lasting impression if crafted well.

As a software engineer, emphasize your technical skills, problem-solving abilities, and any notable projects or achievements. This is your chance to showcase what makes you stand out in the field.

The following examples, a weak summary followed by a strong one, show what an effective summary looks like and how to avoid common pitfalls:

I am a dedicated software engineer with several years of experience and a passion for technology. I hope to find a position where I can use my skills effectively while enjoying a good work-life balance. I believe that my background in programming could help your team achieve its goals if given the chance.

- Uses vague phrases like "several years of experience" without specifying technologies or achievements

- Emphasizes personal desires instead of detailing what value the job seeker brings to potential employers

- Lacks specificity and concrete examples that would demonstrate technical skill and problem-solving abilities

Proactive software engineer with 7+ years of experience in full-stack development, excelling in creating scalable web applications. Spearheaded a project that improved system efficiency by 30% through optimized algorithms and reduced server response time by 50%. Proficient in JavaScript, Python, and cloud computing technologies, with strong problem-solving skills to deliver robust software solutions.

- Begins with clear experience level and area of expertise

- Highlights quantifiable achievements that demonstrate technical impact on product performance

- Mentions specific programming languages and technologies relevant to the role

Showcasing Your Work Experience

The work experience section is the centerpiece of your resume as a software engineer. This is where you’ll provide the bulk of your content, and good resume templates always incorporate this essential section.

Organize this part in reverse-chronological order, clearly listing your previous roles. Use bullet points to highlight key achievements and contributions that demonstrate your skills and impact in each position.

To clarify what makes an effective work history entry for software engineers, we’ve prepared two examples. The first shows what to avoid, and the second shows what catches attention:

Software Engineer

Tech Innovators Inc. – San Francisco, CA

- Wrote code for applications.

- Fixed bugs and tested software.

- Collaborated with team members.

- Participated in meetings to discuss projects.

- Lacks specific details about the technologies used or projects worked on

- Bullet points are vague and do not showcase any significant achievements

- Focuses on routine tasks instead of highlighting contributions or measurable outcomes

Software Engineer

Tech Innovations Inc. – San Francisco, CA

June 2020 - Current

- Developed and optimized scalable web applications using React and Node.js, improving load times by 30%.

- Implemented automated testing frameworks that reduced bugs in production by 40%, improving overall software quality.

- Collaborated with cross-functional teams to design user-centric features, leading to a 20% increase in user engagement.

- Starts each bullet with effective action verbs that clearly demonstrate contributions.

- Incorporates specific metrics to quantify achievements, providing clear evidence of success.

- Highlights relevant technical skills while showcasing collaborative efforts and outcomes.

Your resume summary and work experience are critical components, but the other sections deserve careful consideration as well. For details on crafting each one, explore our guide on how to write a resume.

Top Skills to Include on Your Resume

A well-defined skills section is important for any software engineer's resume. It allows you to quickly demonstrate your qualifications and catch the eye of hiring managers seeking specific expertise.

Hiring managers look for well-rounded candidates, so your resume should include a healthy mix of hard and soft skills.

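Hard skills are technical proficiencies such as programming languages, software development methodologies, and system architecture. Equally important are soft skills, which encompass interpersonal abilities like problem-solving, teamwork, and effective communication that foster collaboration and innovation in project development.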

Selecting the right resume skills helps you align with what employers expect from job seekers. Many organizations use automated screening tools, known as applicant tracking systems (ATS), that filter out applicants who lack essential skills for the position.

To effectively showcase your qualifications, closely examine job postings for insights into which skills are most valued. Tailoring your resume to appeal to both recruiters and ATS algorithms will help increase your chances of landing an interview.
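If you want a quick way to compare a job posting against your resume, the rough sketch below checks which listed skills appear in each. It is only an illustration: real ATS products are not described here and may work very differently, and the function name `find_skill_matches`, the skill list, and the sample texts are all hypothetical.

```python
import re


def find_skill_matches(job_posting: str, resume: str, skills: list[str]) -> dict[str, list[str]]:
    """Split the skills a posting mentions into those the resume covers and those it misses."""

    def mentions(text: str, skill: str) -> bool:
        # Case-insensitive match that does not fire inside a longer word,
        # so "Java" is not counted when the text only says "JavaScript".
        pattern = r"(?<![A-Za-z0-9])" + re.escape(skill) + r"(?![A-Za-z0-9])"
        return re.search(pattern, text, flags=re.IGNORECASE) is not None

    # Only skills that the posting itself asks for are relevant.
    wanted = [s for s in skills if mentions(job_posting, s)]
    return {
        "covered": [s for s in wanted if mentions(resume, s)],
        "missing": [s for s in wanted if not mentions(resume, s)],
    }


# Hypothetical inputs, for illustration only.
skills = ["Python", "Java", "SQL", "React", "Node.js", "API design"]
posting = "We need a backend engineer with Python, SQL, and React experience."
resume = "Skills: Python programming, Java development, SQL databases, API design"

result = find_skill_matches(posting, resume, skills)
print(result)
```

A skill listed under "missing" is a prompt to check whether you genuinely have that skill and left it off, not a reason to add keywords you cannot back up.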

10 skills that appear on successful software engineer resumes

Highlighting essential skills on your resume is key to capturing the attention of hiring managers for software engineer positions. The sought-after skills below also appear in our resume examples.

Here are 10 skills you should consider including in your resume if they align with your qualifications and job requirements:

Data analysis

SQL

Object-oriented programming

Microsoft .NET

Database management

Code debugging

Business intelligence

MySQL

Web technologies

JavaScript

Based on analysis of 5,000+ engineering professional resumes from 2023-2024

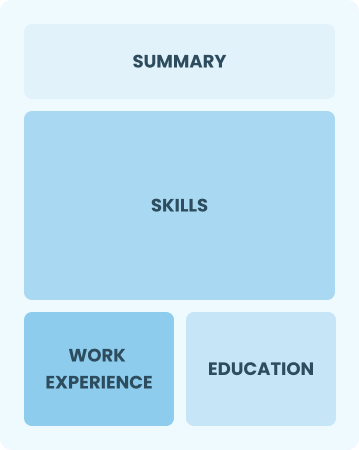

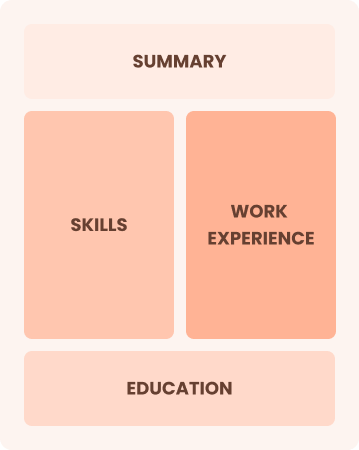

Resume Format Examples

Choosing the right resume format is important for software engineers as it showcases your technical abilities, relevant projects, and career growth in a clear and effective manner.

Functional

Focuses on skills rather than previous jobs

Best for: recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for: mid-career professionals focused on demonstrating their skills and potential for growth

Chronological

Emphasizes work history in reverse order

Best for: seasoned engineers excelling in team leadership and innovative solutions

Frequently Asked Questions

Should I include a cover letter with my software engineer resume?

Absolutely, including a cover letter can significantly improve your application by showcasing your unique qualifications and enthusiasm for the position. It allows you to present your story beyond the resume. If you're seeking assistance, consider our comprehensive guide on how to write a cover letter or use our Cover Letter Generator for quick and easy creation.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs abroad, you will often need a CV instead of a resume. A CV provides a comprehensive overview of your academic and professional history, which many international employers expect. Explore our CV examples to see how others have successfully formatted their documents, and learn how to write a CV tailored to global expectations.

What soft skills are important for software engineers?

Soft skills like communication, teamwork, and problem-solving are essential for software engineers. These interpersonal skills help foster effective collaboration with team members and clients, ensuring projects progress efficiently and innovations are implemented successfully.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills, such as teamwork, analytical thinking, and project management, when applying for software engineer roles. These abilities illustrate your potential to excel in tech environments despite limited direct experience. Provide examples from previous positions where you successfully solved problems or led projects, showcasing how these experiences align with the demands of software engineering.

Should I use a cover letter template?

Yes. A cover letter template tailored for software engineering can improve your application by providing a clear structure that highlights your technical skills, such as coding and problem-solving, and organizes your relevant project experience to catch the hiring manager's attention.

Should I include a personal mission statement on my software engineer resume?

Yes, including a personal mission statement on your resume is recommended. It effectively communicates your values and career aspirations, especially when applying to tech companies that prioritize innovation and culture fit. This personalized touch can resonate well with organizations seeking alignment with their core values.