Hard skills include technical abilities such as project scheduling, risk management, and software proficiency, all of which a technical project manager needs to lead successful projects.

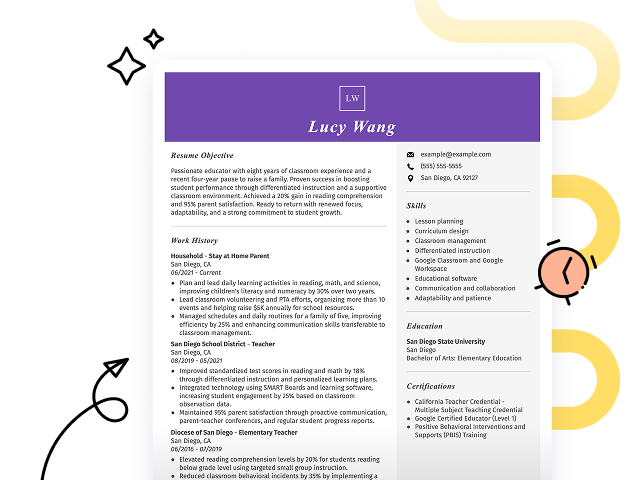

Popular Technical Project Manager Resume Examples

Discover our top technical project manager resume examples that showcase essential skills such as agile methodology, stakeholder communication, and risk management. These examples are designed to help you effectively highlight your achievements and attract potential employers.

Ready to build your standout resume? With Resume Builder, you can access user-friendly templates specifically tailored for project management professionals, making the process simple and efficient.

Recommended

Entry-level technical project manager resume

This entry-level resume for a technical project manager highlights the job seeker's leadership abilities and project management competencies through quantifiable achievements in previous roles. New professionals in this field must show that they can manage projects and that they know the relevant methodologies and tools, even if they lack extensive experience.

Mid-career technical project manager resume

This resume effectively showcases the job seeker's extensive experience and leadership in technical project management. The clear presentation of achievements, such as budget oversight and team supervision, demonstrates readiness for advanced roles and complex project challenges in a dynamic environment.

Experienced technical project manager resume

This work history section highlights the applicant's robust experience as a Technical Project Manager, showcasing impressive achievements like reducing operational costs by 20% through a merger project. The use of bullet points improves readability, making it easy to identify key accomplishments and their impact on productivity.

Resume Template—Easy to Copy & Paste

Emily Liu

Northwood, OH 43626

(555)555-5555

Emily.Liu@example.com

Professional Summary

Dynamic Technical Project Manager with 4 years of experience leading cross-functional teams, ensuring on-time project delivery, and optimizing project processes. Proficient in Agile methodologies and data-driven decision-making to improve project outcomes.

Work History

Technical Project Manager

Innovatech Solutions - Northwood, OH

January 2023 - January 2026

- Led a cross-functional team, boosting sales by 25%.

- Managed projects under M budget efficiently.

- Delivered 10+ projects on time, enhancing market reach.

IT Project Coordinator

TechWave Systems - Cincinnati, OH

January 2021 - December 2022

- Coordinated 15+ project schedules, yielding a 20% efficiency gain.

- Facilitated vendor contracts, reducing costs by 250K.

- Streamlined reporting, enhancing data accuracy by 30%.

Junior Project Analyst

Digital Dynamics Inc. - Cincinnati, OH

January 2020 - December 2020

- Analyzed data, improving process efficiency by 15%.

- Supported project rollout, increasing speed by 20%.

- Developed reports, boosting stakeholder engagement by 40%.

Languages

- Spanish - Beginner (A1)

- Mandarin - Intermediate (B1)

- French - Beginner (A1)

Skills

- Agile Project Management

- Stakeholder Communication

- Risk Management

- Budgeting & Forecasting

- Team Leadership

- Process Improvement

- Cross-Functional Collaboration

- Data Analysis

Certifications

- Certified ScrumMaster - Scrum Alliance

- PMP Certification - Project Management Institute

- ITIL Foundation - AXELOS

Education

Master of Science in Project Management

Stanford University - Stanford, California

June 2019

Bachelor of Science in Information Systems

University of California, Berkeley - Berkeley, California

June 2018

How to Write a Technical Project Manager Resume Summary

Your resume summary is your chance to make a lasting impression on hiring managers. It should clearly highlight your ability to manage technical projects and lead cross-functional teams effectively.

As a technical project manager, it’s essential to showcase your expertise in project lifecycle management, communication skills, and problem-solving abilities. This section should reflect your unique qualifications that set you apart from other applicants.

The following examples illustrate effective strategies and common pitfalls when writing this key part of your resume:

Weak example:

I am an experienced technical project manager looking for a new opportunity. I have worked on many projects and hope to find a position that allows me to use my skills effectively. I value teamwork and am eager to contribute positively to the company's success.

- Lacks specific details about the applicant’s experience and achievements, making it generic

- Emphasizes personal desires rather than articulating what benefits the applicant brings to potential employers

- Uses weak language like 'hope' and 'eager,' which does not convey confidence in their abilities

Strong example:

Results-driven technical project manager with over 7 years of experience leading cross-functional teams in delivering complex IT projects on time and within budget. Increased project delivery efficiency by 20% by implementing Agile methodologies and streamlining communication channels. Proficient in project management tools such as Jira and MS Project, and skilled in stakeholder engagement strategies that keep projects aligned with business objectives.

- Begins with a clear statement of experience level and relevant industry focus

- Highlights quantifiable achievements that underline efficiency improvements, demonstrating direct impact on project success

- Mentions specific technical skills and methodologies relevant to project management roles


Showcasing Your Work Experience

The work experience section is the cornerstone of a technical project manager's resume, which is why resume templates give it so much space. This section will hold the bulk of your content.

Organize this section in reverse-chronological order, detailing your past positions. Use bullet points to succinctly highlight achievements and contributions in each role.

To illustrate effective strategies for presenting your work history, we’ll share examples that demonstrate what makes an entry successful and what pitfalls to avoid.

Weak example:

Technical Project Manager

Tech Innovations Inc. – San Francisco, CA

- Managed project timelines and resources

- Coordinated with team members

- Created reports for stakeholders

- Ensured project met basic requirements

- Omits employment dates, which give the role context

- Bullet points are too general and do not showcase leadership skills

- Emphasizes routine tasks rather than highlighting successful project outcomes

Strong example:

Technical Project Manager

Innovatech Solutions – San Francisco, CA

March 2020 - Current

- Lead cross-functional teams to deliver software projects on time, achieving a 30% increase in project efficiency

- Implement agile methodologies, resulting in a 40% reduction in delivery timelines and improved team collaboration

- Facilitate stakeholder communication to ensure alignment on project goals, improving client satisfaction ratings by 15%

- Each bullet point starts with an action verb that highlights the job seeker's contributions

- Metrics are included to showcase tangible results from the job seeker's efforts

- Relevant skills such as leadership and communication are emphasized through specific examples

While your resume summary and work experience sections carry the most weight, don’t overlook the other sections. Each one contributes to a well-rounded presentation of your skills and qualifications. For additional insights, be sure to explore our complete guide on how to write a resume.

Top Skills to Include on Your Resume

A skills section gives employers a quick snapshot of your qualifications. It lets you present your most relevant abilities, making it easier for employers to see whether you are a good fit.

For hiring managers, this section offers an efficient way to evaluate applicants against role requirements. Technical project managers should highlight both technical (hard) skills and interpersonal (soft) skills.

Soft skills are essential for technical project managers, as they foster effective communication, teamwork, and problem-solving. These abilities ultimately lead to successful project delivery and team cohesion.

When selecting skills for your resume, align them with what employers expect from applicants. Many organizations use applicant tracking systems (ATS) that automatically screen out applications missing the skills a posting requires.

To ensure you stand out, carefully read job postings and note the skills highlighted by recruiters. This practice will guide you in prioritizing relevant abilities that improve your chances of passing through both human and automated evaluations.


10 skills that appear on successful technical project manager resumes

Capture recruiters’ attention by highlighting the skills most sought after in technical project management. Our resume examples feature these skills to help you apply confidently for your next position.

Here are 10 skills you should think about adding to your resume if they align with your experience and job specifications:

- Agile methodology

- Risk management

- Budgeting and forecasting

- Stakeholder engagement

- Communication skills

- Leadership abilities

- Problem-solving aptitude

- Time management

- Technical documentation

- Team collaboration

Based on an analysis of 5,000+ management professional resumes from 2023-2024

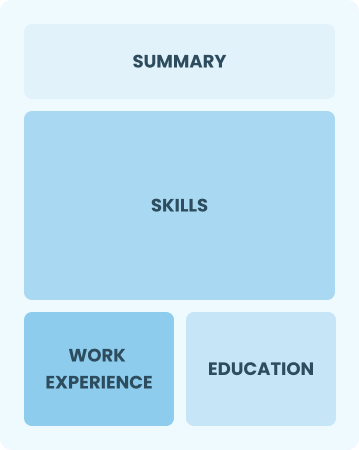

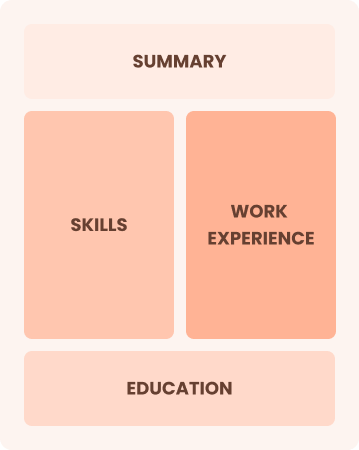

Resume Format Examples

Selecting the right resume format matters for a technical project manager because it emphasizes key skills, relevant experience, and career growth, making your qualifications stand out to potential employers.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for:

Mid-career professionals focused on showcasing their skills and advancing their careers

Chronological

Emphasizes work history in reverse-chronological order

Best for:

Leaders in technical project management with extensive experience

Frequently Asked Questions

Should I include a cover letter with my technical project manager resume?

Absolutely, including a cover letter can significantly improve your application. It allows you to showcase your personality and highlight why you're the perfect fit for the position. If you’re not sure how to write a cover letter, our guide is a great resource. Alternatively, try our Cover Letter Generator to help you create a professional document in just minutes.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs outside the U.S., you will often need a CV instead of a resume, depending on the country. A CV offers a comprehensive view of your academic and professional history, which many international employers prefer. Explore our resources on how to write a CV and see CV examples to ensure your application meets local expectations.

What soft skills are important for technical project managers?

Communication, adaptability, and interpersonal skills are key soft skills for a technical project manager. These abilities foster collaboration among team members, helping you deliver projects successfully while navigating challenges.

I’m transitioning from another field. How should I highlight my experience?

When applying for technical project manager roles, highlight your transferable skills like project coordination, communication, and adaptability. Even if you lack direct experience in tech, showcase how you've successfully led teams or managed projects across different sectors. Use concrete examples to illustrate how these experiences align with the responsibilities of a technical project manager.

Where can I find inspiration for writing my cover letter as a technical project manager?

If you're preparing to apply for technical project manager roles, consider exploring our expertly crafted cover letter examples. These samples can provide valuable insights into effective formatting, content ideas, and ways to showcase your qualifications confidently.

Should I include a personal mission statement on my technical project manager resume?

Yes, including a personal mission statement on your resume is advisable. It effectively showcases your core values and career aspirations, making a strong impact on potential employers, particularly those in innovative or purpose-driven industries that align with your vision.