Hard skills include analytical abilities, project management, and data interpretation.

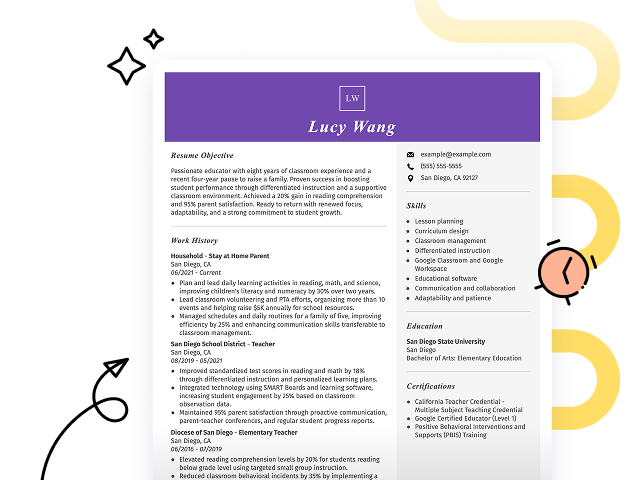

Popular Consultant Resume Examples

Check out our top consultant resume examples that emphasize key skills such as strategic planning, problem-solving, and effective communication. These examples will guide you in showcasing your accomplishments to attract potential employers.

Ready to build your unique resume? Our Resume Builder offers user-friendly templates specifically designed for consultants, helping you make a strong impression in your job applications.


Customer service consultant resume

This resume uses a clean layout and professional fonts that improve readability. These design elements make the job seeker's achievements clear and notable, helping to create a strong initial impression on potential employers.

Client relations consultant resume

This resume effectively combines key skills such as client retention strategies and CRM software optimization with relevant work experience. This approach allows employers to clearly see how the job seeker's technical expertise has led to tangible results in previous roles, improving their understanding of the job seeker's overall capabilities.

Customer experience consultant resume

This resume skillfully uses bullet points to highlight the applicant's achievements, making it easy for hiring managers to quickly identify key contributions. Strategic spacing and clear section headings improve readability, ensuring that extensive experience is presented concisely on a single page without clutter.

Resume Template—Easy to Copy & Paste

Hiro Park

Silverlake, WA 98292

(555)555-5555

Hiro.Park@example.com

Professional Summary

Dynamic Consultant with 4 years' experience in enhancing client operations, driving project success, and leveraging data expertise to optimize business strategies.

Work History

Consultant

Strategic Insight Group - Silverlake, WA

May 2024 - October 2025

- Improved client efficiency by 20% through analysis.

- Led revenue-generating projects.

- Streamlined processes reducing costs by 15%.

Business Analyst

Tech Innovations Co. - Eastside, WA

May 2022 - April 2024

- Enhanced data reporting accuracy by 25%.

- Facilitated cross-department collaboration.

- Managed budgets, decreasing expenses by 10%.

Market Research Analyst

Global Commerce Ltd. - Eastside, WA

October 2021 - April 2022

- Analyzed trends boosting client sales by 8%.

- Developed surveys increasing responses by 30%.

- Synthesized reports for executive insights.

Skills

- Analytical thinking

- Project management

- Data analysis

- Process improvement

- Business strategy

- Market research

- Client communication

- Financial modeling

Education

Master's Degree, Business Administration

University of Washington - Seattle, WA

June 2021

Bachelor's Degree, Economics

University of Oregon - Eugene, OR

June 2019

Certifications

- Certified Management Consultant - Institute of Management Consultants USA

- Project Management Professional - Project Management Institute

Languages

- Spanish - Beginner (A1)

- French - Beginner (A1)

- Mandarin - Intermediate (B1)

How to Write a Consultant Resume Summary

Your resume summary is the first thing employers will see, so it’s important to make a powerful impression that showcases your fit for the consultant role. This section should present your analytical skills and problem-solving abilities, demonstrating how you can add value to potential clients.

As a consultant, you need to highlight your expertise in strategic planning and project management while emphasizing your communication skills. These attributes will set you apart in this competitive field.

To guide you in crafting a powerful summary, here are two examples: first a weak summary and the reasons it falls short, then a strong summary and the reasons it works:

I am an experienced consultant looking for a new opportunity where I can use my skills to help a company succeed. I have worked in various industries and am eager to find a position that offers good benefits and a chance for advancement. I believe I can contribute positively if given the chance.

- Lacks concrete examples of skills or accomplishments, making it hard to assess the applicant's value

- Emphasizes personal desires rather than highlighting how the applicant can benefit potential employers

- Uses generic phrases that do not distinguish the applicant from others in the field

Results-driven consultant with 7+ years of experience in strategic planning and operational improvement across various industries. Successfully led a project that increased client revenue by 20% through improved market analysis and innovative solution implementation. Proficient in data analytics, stakeholder engagement, and process optimization to drive organizational efficiency.

- Begins with clear experience duration and areas of expertise

- Highlights a quantifiable achievement that illustrates direct impact on client success

- Showcases relevant technical skills essential for consulting roles


Showcasing Your Work Experience

The work experience section of your resume is important for a consultant, as it will hold the bulk of your content. Good resume templates always highlight this key area to ensure it's easy to navigate.

This section should be organized in reverse-chronological order, listing your previous consulting roles. Use bullet points to effectively communicate your achievements and the impact you've made in each position.

To further assist you, here are two work history entries for consultants: first a weak entry that shows the pitfalls to avoid, then a strong entry that shows what an effective one looks like:

Consultant

Tech Solutions Inc. – New York, NY

- Provided advice to clients.

- Attended meetings and took notes.

- Collaborated with team members.

- Researched industry trends and shared insights.

- Lacks specific details about the types of consulting provided

- Bullet points are overly generic and do not highlight unique skills or contributions

- Does not mention any measurable outcomes or successes from consulting efforts

Consultant

Global Solutions Inc. – New York, NY

March 2020 - Present

- Develop tailored strategies for clients in various sectors, improving operational efficiency by an average of 30%.

- Lead cross-functional teams to execute projects on time and within budget, achieving a 95% client satisfaction rate.

- Conduct comprehensive market analysis to identify growth opportunities, resulting in a 40% increase in revenue for key clients.

- Starts each bullet with powerful action verbs that clearly convey the applicant's impact

- Incorporates specific percentages and metrics to quantify achievements

- Highlights essential skills relevant to consulting while showcasing measurable results

While your resume summary and work experience are important elements, don’t overlook the importance of other sections that contribute to a well-rounded presentation. For more detailed insights on crafting a strong resume, refer to our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A skills section is important for any resume because it allows you to quickly showcase your qualifications to potential employers. By highlighting your strengths, this section makes it easier for hiring managers to see how you fit the role.

Your application will be more competitive if your resume effectively balances your hard skills and soft skills.

Consultants must master hard skills, such as data analysis and project management, to solve client challenges effectively. Soft skills encompass strong communication, adaptability, and relationship building, which are essential for fostering collaboration and understanding client needs in a dynamic environment.

Choosing the right resume skills is important to align with what employers expect. Many organizations use automated screening tools that filter out applicants lacking essential skills for the position.

To improve your chances of being noticed, carefully review job postings to identify which skills are emphasized. This approach will help you prioritize the most relevant abilities on your resume, ensuring it resonates with both recruiters and applicant tracking systems (ATS).
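One rough way to see which skills a posting emphasizes is to count how often each skill phrase appears in its text. The Python sketch below is only an illustration: the function name `rank_skills`, the sample posting, and the skill list are hypothetical, and real screening tools may match keywords quite differently.

```python
import re


def rank_skills(job_posting: str, candidate_skills: list[str]) -> list[tuple[str, int]]:
    """Return (skill, mention count) pairs for skills found in the posting,
    ordered from most to least mentioned."""
    text = job_posting.lower()
    found = []
    for skill in candidate_skills:
        # Case-insensitive, whole-phrase match (an assumption for this sketch).
        pattern = r"\b" + re.escape(skill.lower()) + r"\b"
        hits = len(re.findall(pattern, text))
        if hits:
            found.append((skill, hits))
    # sorted() is stable, so ties keep the order of candidate_skills.
    return sorted(found, key=lambda pair: pair[1], reverse=True)


# Hypothetical posting text and skill list, for illustration only.
posting = (
    "We seek a consultant with strong data analysis and project management "
    "experience. Data analysis of client operations is a daily task."
)
skills = ["Project management", "Data analysis", "Time management", "Adaptability"]

ranked = rank_skills(posting, skills)
print(ranked)
```

Skills that rank highest are reasonable candidates to list first, provided they reflect your actual experience. Skills that do not appear in the posting at all can be moved lower or left out.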


10 skills that appear on successful consultant resumes

Highlighting sought-after skills in your resume is key to attracting recruiters' attention for consultant roles. Reviewing resume examples shows how to present these skills effectively and gives you a strong foundation for your own applications.

Consider incorporating relevant skills from the following list when they align with your experience and the job requirements:

- Analytical thinking

- Project management

- Effective communication

- Problem-solving

- Team collaboration

- Strategic planning

- Data analysis

- Client relationship management

- Adaptability

- Time management

Based on analysis of 5,000+ customer service professional resumes from 2023-2024

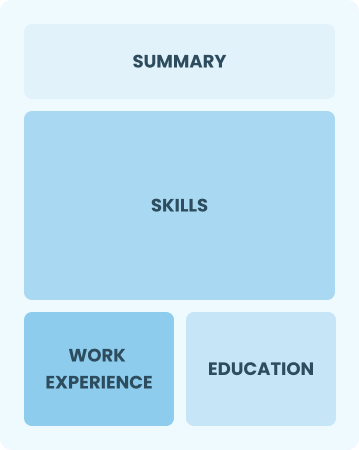

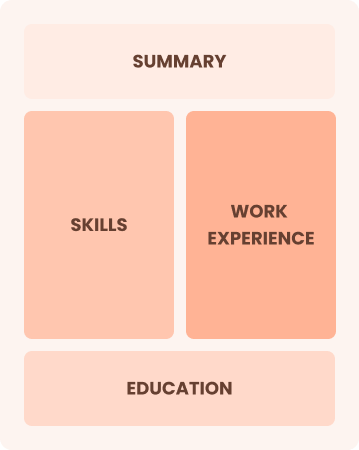

Resume Format Examples

Selecting the ideal resume format is important because it highlights your key skills and experience effectively, helping potential employers quickly understand your professional journey.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for:

Mid-career consultants looking to demonstrate their skills and growth potential

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned consultants with an extensive, steady work history

Frequently Asked Questions

Should I include a cover letter with my consultant resume?

Absolutely. Including a cover letter is a fantastic way to highlight your unique qualifications and show recruiters your enthusiasm for the position. It allows you to elaborate on key experiences that may not fully shine through in your resume. For tips on how to write a cover letter, explore our comprehensive guide or use our easy-to-use Cover Letter Generator for quick assistance.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs outside the U.S., a CV is often preferred over a resume due to its detailed nature. To ensure your CV meets international standards, explore our resources on how to write a CV and use CV examples that provide guidance on proper formatting and content creation.

What soft skills are important for consultants?

Soft skills such as active listening, adaptability, and effective communication are essential for consultants. These interpersonal skills facilitate strong relationships with clients and team members, enabling better collaboration and more successful project outcomes.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills like communication, project management, and analytical thinking from previous jobs. These abilities show your readiness to excel as a consultant, even if you lack direct experience in the field. Share specific examples that connect your past successes to key consulting tasks, demonstrating how you can add value from day one.

Should I use a cover letter template?

Yes, using a cover letter template tailored for consultants can significantly improve your application. It provides a clear structure that organizes your industry-specific skills, such as problem-solving and client management, while effectively showcasing relevant achievements to hiring managers.

How do I add my resume to LinkedIn?

To add your resume to LinkedIn, upload it directly to your profile or highlight essential achievements in the "About" and "Experience" sections. This approach helps recruiters find you more easily and can expand your networking and job opportunities.