Hard skills refer to technical abilities, including skill in architectural design software, understanding building codes, and knowledge of construction materials.

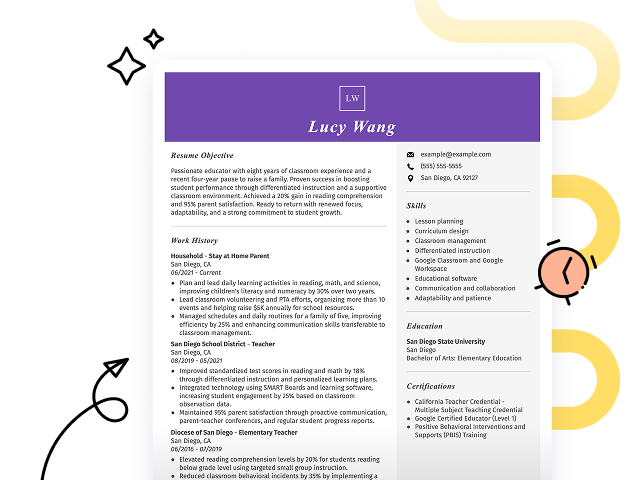

Popular Architect Resume Examples

Discover our top architect resume examples that emphasize key skills such as design innovation, project management, and collaboration. These examples illustrate how to effectively convey your accomplishments to captivate potential employers.

Looking to build an impressive resume? Our Resume Builder offers user-friendly templates specifically designed for architects, helping you create a standout application with ease.

Recommended

Entry-level architect resume

This entry-level resume effectively highlights the job seeker's sustainable design expertise and notable project achievements, including budget management and client satisfaction improvements. New professionals in this field must convey their design skills and project management capabilities through relevant experiences, even if they have limited work history.

Mid-career architect resume

This resume effectively communicates the job seeker's readiness for advanced architect roles by showcasing leadership in large-scale projects and a strong foundation in sustainable design. The clear presentation of accomplishments and skills reflects significant career progression and readiness for complex challenges.

Experienced architect resume

This resume's work history section highlights the applicant's extensive experience as an architect, demonstrating their leadership in redesigning over 50 commercial spaces and achieving a 30% boost in energy efficiency. The clear bullet points improve readability, making it easy for hiring managers to quickly identify key accomplishments.

Resume Template—Easy to Copy & Paste

Li Zhang

Detroit, MI 48214

(555)555-5555

Li.Zhang@example.com

Professional Summary

Dynamic Architect with 4 years of proven expertise in sustainable building design and urban planning, creating high-impact structures that optimize cost and functionality. Skilled in AutoCAD, Revit, and BIM coordination, delivering innovative results for 12M+ projects.

Work History

Architect

ModernArch Design Studio - Detroit, MI

October 2024 - October 2025

- Designed 50+ sustainable buildings in urban areas.

- Redesigned layouts, reducing project costs by 12%.

- Collaborated with 15 stakeholders for unique designs.

Junior Architectural Consultant

Skyline Creations Group - Southgate, MI

October 2022 - September 2024

- Streamlined material usage, cutting costs by 10%.

- Drafted 25+ technical drawings for commercial projects.

- Led design reviews for a 1.5M retail complex project.

Architectural Assistant

Urban Designs Collective - Detroit, MI

October 2021 - September 2022

- Generated 40+ concept designs for residential units.

- Optimized floor plans, increasing efficiency by 20%.

- Conducted site analyses for 5+ renovation projects.

Skills

- Sustainable Building Design

- Urban Planning

- 3D Modeling & Rendering

- Construction Documentation

- Project Management

- AutoCAD & Revit

- BIM Coordination

- LEED Certifications

Education

Master of Architecture Architectural Design

Harvard Graduate School of Design Cambridge, Massachusetts

May 2020

Bachelor of Arts Architecture

University of California, Berkeley Berkeley, California

May 2018

Certifications

- LEED Accredited Professional - U.S. Green Building Council

- Certified Architectural Drafter - National Building Institute

- Project Management Professional (PMP) - Project Management Institute

Languages

- Spanish - Beginner (A1)

- French - Intermediate (B1)

- German - Intermediate (B1)

How to Write an Architect Resume Summary

Your resume summary is the first thing employers will see, so it's important to make a strong impression that highlights your skills as an architect. This section should showcase your design expertise, project management experience, and innovative approach to creating functional spaces.

To give you a clearer picture of what makes an effective summary, here are examples that illustrate both successful and less effective approaches. These examples will guide you in crafting a compelling introduction that stands out:

I am a dedicated architect with years of experience in the industry. I hope to find a position where I can use my skills and contribute to exciting projects. A company that values creativity and offers room for advancement would be perfect for me. I believe I could make a positive impact if given the chance.

- Lacks concrete examples of skills or achievements relevant to architecture, making it vague

- Overly focused on personal desires rather than illustrating what value the job seeker brings to potential employers

- Uses generic phrases instead of specific language that highlights unique strengths or contributions in architectural design

Innovative architect with over 7 years of experience in residential and commercial design, specializing in sustainable and energy-efficient building practices. Successfully led a team that reduced construction costs by 20% while improving project delivery times by 30%. Proficient in AutoCAD, Revit, and Building Information Modeling (BIM) to create cutting-edge designs that meet client needs and regulatory standards.

- Highlights specific experience duration and areas of specialization

- Includes measurable achievements that demonstrate cost savings and efficiency improvements

- Lists relevant technical skills that are critical for architectural roles

Pro Tip

Showcasing Your Work Experience

The work experience section is important for your resume as an architect, as it will contain the bulk of your content. Quality resume templates always emphasize this key area.

This section should be organized in reverse-chronological order, detailing your previous positions. Use bullet points to effectively highlight your achievements and contributions in each architectural role.

To help you craft a compelling work history, we’ll present some examples that illustrate effective entries for architects. These examples will clarify what makes a strong impact and what to steer clear of:

Architect

ABC Design Studio – Los Angeles, CA

- Designed buildings

- Reviewed project plans

- Collaborated with clients and contractors

- Handled administrative duties

- Lacks employment dates to provide context

- Bullet points are too general without showcasing specific skills or achievements

- Emphasis on routine tasks instead of highlighting powerful contributions or results

Architect

GreenSpace Architects – San Francisco, CA

March 2020 - Current

- Led the design of a mixed-use development project, resulting in a 30% increase in usable community space

- Used sustainable materials and practices, achieving LEED Gold certification for multiple projects

- Collaborated with clients to refine architectural concepts, improving client satisfaction ratings by 40%

- Starts each bullet with effective action verbs to highlight achievements

- Incorporates quantifiable metrics to demonstrate the job seeker's contributions

- Showcases relevant skills such as sustainability and client collaboration that are vital for an architect

While your resume summary and work experience are important, don't overlook the importance of other sections. Each part contributes to presenting a well-rounded profile. For additional insights on crafting an effective resume, explore our detailed guide on how to write a resume.

Top Skills to Include on Your Resume

A well-defined skills section is important for an architect's resume. It allows potential employers to quickly assess your qualifications and understand how you can contribute to their projects.

For architects, it's important to showcase both hard skills and soft skills in a resume. Employers are searching for candidates with the required industry knowledge but also soft skills that ensure they can be valuable team members and efficient workers.

On the other hand, soft skills encompass interpersonal qualities such as creativity, communication, and teamwork that promote collaboration and help ensure projects are successfully executed while addressing client needs.

Selecting the right resume skills is essential for aligning with employer expectations and navigating automated screening systems. Many companies use software to filter out job seekers lacking key qualifications, so it's important to present relevant abilities effectively.

To identify which skills to highlight, thoroughly review job postings related to your desired position. These listings often provide valuable insights into what recruiters prioritize, helping you tailor your resume for both human readers and ATS software.

Pro Tip

10 skills that appear on successful architect resumes

Improving your resume by including essential skills can significantly attract the attention of recruiters seeking architects. You can find these skills showcased in our resume examples, helping you apply for positions with greater confidence.

By the way, here are 10 key skills you should consider showcasing on your resume if they align with your qualifications and job requirements:

Creative problem-solving

Attention to detail

Project management

Technical drawing

Building codes knowledge

Sustainable design principles

Collaboration and teamwork

Effective communication

Time management

3D modeling skills

Based on analysis of 5,000+ computer software professional resumes from 2023-2024

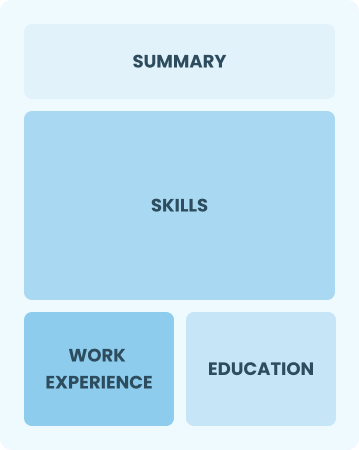

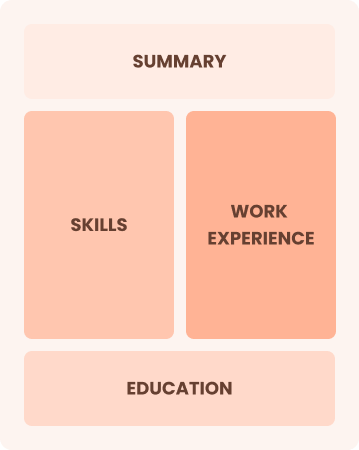

Resume Format Examples

Selecting the appropriate resume format is important for architects, as it showcases your design expertise, project experience, and professional growth in a clear and engaging manner.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for:

Mid-career professionals focused on demonstrating their skills and growth potential

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned architects leading innovative design projects with strategic vision

Architect Salaries in the Highest-Paid States

Our architect salary data is based on figures from the U.S. Bureau of Labor Statistics (BLS), the authoritative source for employment trends and wage information nationwide.

Whether you're entering the workforce or considering a move to a new city or state, this data can help you gauge what fair compensation looks like for architects in your desired area.

Frequently Asked Questions

Should I include a cover letter with my architect resume?

Absolutely. Including a cover letter is essential as it allows you to showcase your unique qualifications and enthusiasm for the architect role. It can set you apart from other applicants by adding depth to your resume. If you need assistance, explore our guide on how to write a cover letter or use our Cover Letter Generator for quick support.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs abroad, use a CV instead of a resume as it is often the preferred format. A CV provides a comprehensive overview of your academic and professional history. Explore our resources on how to write a CV and discover various CV examples to ensure yours meets international expectations.

What soft skills are important for architects?

Soft skills such as communication, collaboration, and problem-solving are essential for architects. These interpersonal skills enable effective teamwork with clients and contractors, fostering strong relationships and ensuring that projects meet both design intentions and client needs.

I’m transitioning from another field. How should I highlight my experience?

When transitioning to an architect role, highlight transferable skills such as creativity, project management, and teamwork. These abilities showcase your versatility and readiness to excel in design and planning. Use concrete examples from your previous jobs to illustrate how these strengths align with architectural tasks and responsibilities. This connection will improve your appeal to potential employers.

Where can I find inspiration for writing my cover letter as a architect?

Aspiring architects can find inspiration in our cover letter examples. These samples offer content ideas, formatting tips, and effective ways to showcase skills and experiences. Use them as a guide to craft unique application materials that stand out in the competitive architecture field.

Should I use a cover letter template?

Yes, using a cover letter template tailored for architects can significantly improve the structure and organization of your content, allowing you to effectively showcase relevant skills such as design skill, project management experience, and teamwork accomplishments that appeal to hiring managers.