Hard skills include expertise in cloud infrastructure, automation with Terraform, and continuous integration/continuous deployment (CI/CD) practices, all of which are important for managing AWS environments effectively.

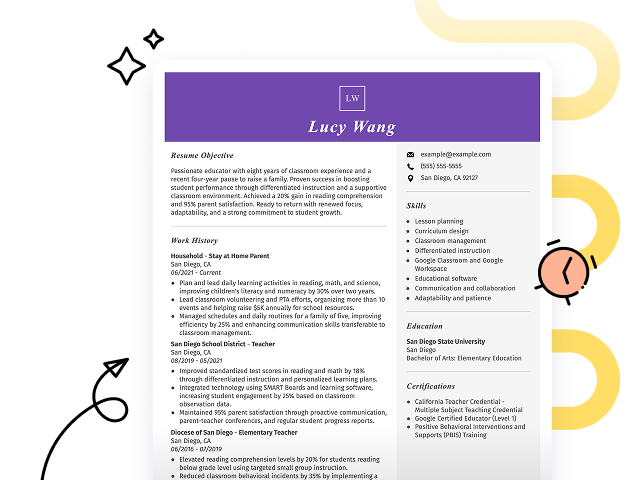

Popular AWS DevOps Engineer Terraform Resume Examples

Discover our top AWS DevOps engineer resume examples that emphasize key skills such as cloud infrastructure management, automation with Terraform, and continuous integration. These resumes demonstrate how to effectively showcase your technical expertise to potential employers.

Ready to build your ideal resume? Our Resume Builder offers user-friendly templates designed specifically for tech professionals, helping you make a lasting impression in your job applications.

Entry-level AWS DevOps engineer Terraform resume

This entry-level resume effectively highlights the applicant's technical skills in AWS cloud architecture and automation, showcasing significant achievements while working as an AWS DevOps engineer. New professionals in this field must demonstrate their skill in relevant technologies and the ability to improve operational efficiencies, even with limited hands-on experience.

Mid-career AWS DevOps engineer Terraform resume

This resume clearly presents key qualifications by highlighting significant achievements in AWS and Terraform. The structured layout illustrates the applicant's growth into leadership roles, showcasing their readiness to tackle complex DevOps challenges while driving efficiency and innovation.

Experienced AWS DevOps engineer Terraform resume

This resume's work history section demonstrates the applicant's extensive experience as an AWS DevOps engineer, highlighting their success in automating 75% of deployment processes and reducing server costs by 20%. The clear bullet points improve readability, making it easy for hiring managers to identify key achievements.

Resume Template—Easy to Copy & Paste

Suki Davis

Los Angeles, CA 90021

(555)555-5555

Suki.Davis@example.com

Professional Summary

AWS DevOps Engineer specializing in Terraform, with five years of experience in designing, automating, and optimizing cloud solutions. Proven record of reducing costs and streamlining operations. Expert in CI/CD, containerization, and cloud scalability.

Work History

AWS DevOps Engineer Terraform

Cloud Solutions Corp - Los Angeles, CA

June 2023 - October 2025

- Deployed 150+ AWS resources using Terraform.

- Reduced infrastructure costs by 20% in one year.

- Automated 95% of operational tasks for efficiency.

Cloud Infrastructure Engineer

Tech Innovations Inc - San Francisco, CA

June 2021 - May 2023

- Migrated 75% of apps to AWS cloud platform.

- Enhanced system uptime to 99.97% annually.

- Implemented CI/CD pipelines boosting deployment by 40%.

DevOps Specialist

Quantum Technologies - Riverside, CA

June 2020 - May 2021

- Improved deployment speed by 30% using Jenkins.

- Configured and maintained 100+ servers.

- Optimized resource allocation, saving $100K annually.

Skills

- AWS Cloud Architecture

- Terraform

- CI/CD Pipelines

- Automation

- Scripting (Python, Bash)

- Infrastructure as Code

- Containerization (Docker)

- Monitoring and Logging

Certifications

- AWS Certified DevOps Engineer - Professional - Amazon Web Services

- Terraform Associate - HashiCorp

Education

Master of Science, Computer Science

Stanford University - Stanford, California

May 2020

Bachelor of Engineering, Information Technology

University of California, Berkeley - Berkeley, California

May 2018

Languages

- Spanish - Beginner (A1)

- German - Beginner (A1)

- Mandarin - Beginner (A1)

How to Write an AWS DevOps Engineer Terraform Resume Summary

Your resume summary is the first impression employers will have of you, making it important to present your qualifications clearly and compellingly. As an AWS DevOps engineer specializing in Terraform, you should emphasize your skills in cloud deployment, infrastructure automation, and collaboration within cross-functional teams. The following summary examples will illustrate effective ways to showcase your expertise and help you identify what resonates with hiring managers:

I am an experienced AWS DevOps engineer with skills in managing cloud infrastructure and automation. I want to find a position where I can use my knowledge of Terraform and improve the company’s projects. A role that allows for personal growth and flexibility is what I am looking for, as I believe it would help me contribute more effectively.

- Lacks specific achievements or measurable outcomes related to AWS and Terraform expertise

- Focuses on personal desires rather than emphasizing contributions to potential employers

- Uses generic phrases that do not clearly demonstrate unique qualifications or value

Results-driven AWS DevOps Engineer with 4+ years of experience in cloud infrastructure management and automation, specializing in infrastructure as code using Terraform. Improved deployment efficiency by 30% through the implementation of CI/CD pipelines and reduced system downtime by 25% via proactive monitoring solutions. Proficient in Docker, Kubernetes, and AWS services including EC2 and S3.

- Starts with a clear indication of experience level and specific areas of expertise

- Highlights quantifiable achievements that demonstrate significant improvements in operational efficiency

- Lists relevant technical skills that are critical for success in DevOps roles

Showcasing Your Work Experience

The work experience section is the centerpiece of your resume as an AWS DevOps engineer specializing in Terraform. This is where you'll provide most of your content, and it's a must-have in any well-structured resume template.

Your work history should be listed in reverse-chronological order, with bullet points highlighting key achievements and contributions to past roles. Focus on how you've used AWS and Terraform to drive success.

To help you craft a strong work experience section, we'll show you examples illustrating what works effectively—and what doesn't—for an AWS DevOps engineer with Terraform expertise:

AWS DevOps Engineer

Tech Innovations Inc. – Austin, TX

- Managed cloud infrastructure

- Automated deployment processes

- Collaborated with development teams

- Ensured system performance and security

- Lacks specific achievements or metrics related to cloud management

- Bullet points are too broad and do not highlight unique skills

- Focuses on general responsibilities rather than measurable results

AWS DevOps Engineer

Tech Innovations Inc. – Austin, TX

March 2020 - Present

- Automated infrastructure deployment using Terraform, reducing provisioning time by 40%

- Implemented CI/CD pipelines that increased deployment frequency by 30%, improving the team's productivity

- Collaborated with development teams to optimize cloud architecture, resulting in a 20% reduction in operational costs

- Each bullet starts with a powerful action verb, clearly detailing the job seeker's contributions

- Quantifiable results demonstrate the impact of the job seeker's work on team efficiency and cost savings

- Highlights key skills relevant to AWS and DevOps practices, showcasing the job seeker's expertise

While the resume summary and work experience are important components of your resume, don't overlook other sections that can improve your application. For detailed insights on crafting a standout resume, check out our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A well-defined skills section is important for any resume, as it allows you to succinctly showcase your qualifications. This part of your resume captures the attention of hiring managers and demonstrates that you possess the necessary competencies for the role.

Hiring managers seek candidates who bring both specialized knowledge and strong people skills to the table. Highlighting a mix of hard and soft skills on your resume shows you can deliver results while working effectively with others.

Soft skills involve strong communication, teamwork, and problem-solving abilities that are essential for collaborating with cross-functional teams and ensuring seamless project execution.

When selecting resume skills, it's important to align them with what employers seek in their ideal applicants. Many organizations use automated systems that filter out job seekers lacking essential skills for the role.

To tailor your resume effectively, review job postings closely to gain insight into which skills are most relevant. Highlighting these key qualifications will not only grab recruiters' attention but also help ensure your resume successfully passes through ATS filters.

10 skills that appear on successful AWS DevOps engineer Terraform resumes

Capture recruiters' attention by including the essential skills that employers seek for AWS DevOps engineer positions. Our resume examples show these skills in context, so you can apply with the confidence a polished resume provides.

Consider integrating relevant skills from this list into your resume, based on your qualifications and the job requirements:

- Terraform

- Continuous integration/continuous deployment (CI/CD)

- Cloud architecture design

- Scripting languages (Python, Bash)

- Monitoring and logging tools

- Containerization and orchestration (Docker, Kubernetes)

- Version control systems (Git)

- Infrastructure as code

- Automation frameworks

- Collaboration and communication skills

Based on analysis of 5,000+ engineering professional resumes from 2023-2024

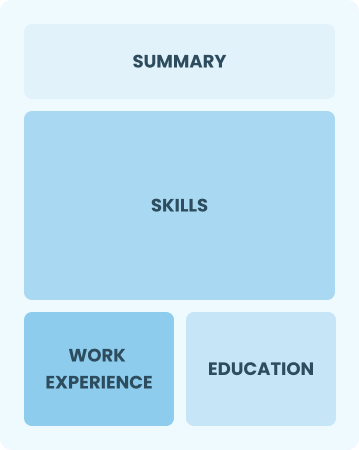

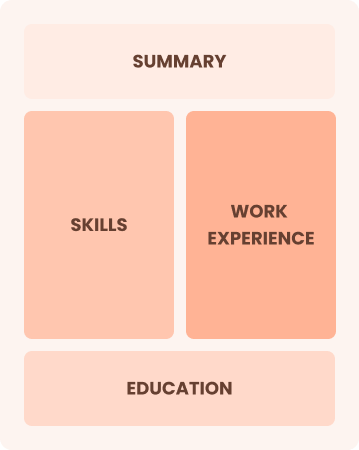

Resume Format Examples

Choosing the right resume format is important for an AWS DevOps engineer using Terraform, as it showcases your technical expertise and project experience in a clear, compelling way.

Functional

Focuses on skills rather than previous jobs

Best for: Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for: Mid-career professionals focused on demonstrating their technical skills and growth potential

Chronological

Emphasizes work history in reverse order

Best for: Experts leading high-impact, scalable AWS DevOps initiatives with Terraform

Frequently Asked Questions

Should I include a cover letter with my AWS DevOps engineer Terraform resume?

Absolutely. Including a cover letter is a great way to highlight your unique qualifications and show genuine interest in the position. It allows you to elaborate on your skills and experiences that align with the job. For assistance, consider exploring our resources on how to write a cover letter or try the Cover Letter Generator for quick help.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs internationally, a CV is often preferred over a resume, particularly in Europe and Asia. Explore our resources that include CV examples and tips tailored to global standards for guidance on proper CV formatting and creation. If you're unsure how to write a CV, our detailed instructions can help.

What soft skills are important for AWS DevOps engineers?

Soft skills like communication, problem-solving, and collaboration are essential for AWS DevOps engineers using Terraform. These interpersonal skills facilitate effective teamwork and ensure smooth project execution, helping to bridge the gap between development and operations while fostering a positive work environment.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills such as teamwork, adaptability, and technical skill when applying for AWS DevOps engineer positions. These abilities show your potential to excel in cloud environments, even if your previous experience is in a different field. Share concrete examples that demonstrate how you've tackled challenges or streamlined processes to align with the demands of the role.

How should I format a cover letter for an AWS DevOps engineer job?

To format a cover letter, start by including your name and contact details at the top. Next, add a professional greeting followed by an introduction designed to capture attention. Incorporate a concise summary of your relevant skills that align with the job description for the AWS DevOps engineer role. Conclude with a strong closing statement that invites further discussion about your application.

Should I use a cover letter template?

Using a cover letter template for an AWS DevOps engineer specializing in Terraform ensures your application is well-structured and highlights key skills like infrastructure automation and cloud optimization, making it easier for hiring managers to see your strengths.