Hard skills for an Informatica developer include expertise in ETL processes and data integration, along with proficiency in SQL and data modeling techniques.

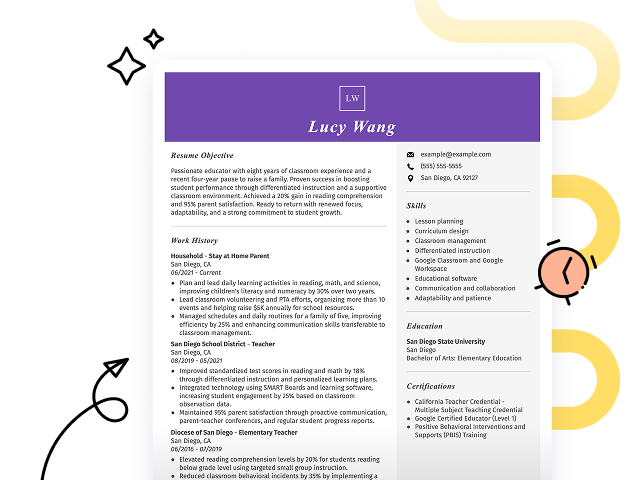

Popular Informatica Developer Resume Examples

Check out our top Informatica developer resume examples that demonstrate key skills such as ETL processes, data warehousing, and system integration. These examples will help you effectively showcase your expertise to potential employers.

Ready to build your ideal resume? Our Resume Builder offers user-friendly templates specifically designed for tech professionals, making it simple to create a standout document.


Entry-level Informatica developer resume

This entry-level resume effectively highlights the job seeker's strong foundation in ETL development and data integration. It emphasizes significant achievements such as reducing data processing time and increasing system efficiency. New professionals in this field should demonstrate their technical skills, their ability to optimize processes, and their potential to contribute to organizational success despite limited experience.

Mid-career Informatica developer resume

This resume effectively showcases the job seeker's qualifications by highlighting significant achievements and technical skills. The clear structure demonstrates a strong career trajectory, positioning them as a capable Informatica developer ready for advanced responsibilities and leadership opportunities.

Experienced Informatica developer resume

This resume's work history section exemplifies the applicant's strong expertise as an Informatica developer, highlighting significant achievements such as boosting data processing efficiency by 30% and improving accuracy by 20%. The bullet points clearly outline accomplishments, making it easy for hiring managers to assess qualifications quickly.

Resume Template: Easy to Copy & Paste

Michael Rodriguez

Dallas, TX 75204

(555) 555-5555

Michael.Rodriguez@example.com

Professional Summary

Dynamic Informatica Developer with 5 years of experience in ETL, data warehousing, and enhancing data processing efficiency. Proven track record of optimizing ETL workflows and improving data pipeline reliability.

Work History

Informatica Developer

TechFusion Incorporated - Dallas, TX

May 2023 - October 2025

- Improved data processing efficiency by 30%

- Developed 100+ ETL jobs for data migration

- Optimized workflows, reducing runtime by 25%

ETL Engineer

Data Dynamics Solutions - Austin, TX

May 2021 - April 2023

- Increased data pipeline reliability by 40%

- Managed ETL processes for 50TB+ of data

- Reduced data inconsistencies by 15%

Business Intelligence Analyst

Insight Analytics Corp - Pinehill, TX

May 2020 - April 2021

- Boosted report generation speed by 20%

- Built BI dashboards that achieved 75% user satisfaction

- Analyzed business queries, reducing errors by 10%

Languages

- Spanish - Beginner (A1)

- French - Intermediate (B1)

- German - Beginner (A1)

Skills

- ETL Development

- Data Warehousing

- Informatica PowerCenter

- SQL Programming

- Data Analysis

- Workflow Optimization

- Performance Tuning

- Problem Solving

Certifications

- Certified Informatica Developer - Informatica University

- ETL Architecture Certified - Data Architecture Institute

Education

April 2020

University of California, Berkeley - Berkeley, California

Bachelor's in Information Technology

May 2018

Stanford University - Stanford, California

How to Write an Informatica Developer Resume Summary

Your resume summary is often the first thing employers notice, so it's important to make it powerful. As an Informatica developer, you should highlight your technical expertise and your experience in data integration and ETL processes.

In this role, showcasing your proficiency with Informatica tools and your problem-solving abilities can set you apart from the competition. Focus on relevant projects or accomplishments that demonstrate your value to potential employers.

To guide you in crafting an effective summary, consider these examples that illustrate what works well and what to avoid:

I am an Informatica developer with several years of experience. I am looking for a challenging role where I can apply my skills and help the company succeed. A position that offers a good work-life balance and chances to advance would be great for me. I believe I could contribute positively to your team if given the chance.

- Lacks specific details about relevant skills and achievements in Informatica development

- Overuses personal language, making it feel less professional and more generic

- Emphasizes what the applicant seeks rather than highlighting their unique contributions to potential employers

Results-driven Informatica Developer with over 6 years of experience in data integration and ETL processes. Reduced data processing time by 30% by implementing efficient mapping strategies, significantly improving operational efficiency. Proficient in SQL, PL/SQL, and working with large datasets to deliver actionable insights that support business decision-making.

- Starts with clear experience duration and specialization in data integration

- Highlights a quantifiable achievement that illustrates significant improvement in process efficiency

- Mentions relevant technical skills like SQL and PL/SQL, which are important for Informatica roles


Showcasing Your Work Experience

The work experience section is where you present the bulk of your qualifications as an Informatica developer. Good resume templates give this section prominence so that it stands out.

This portion of your resume should be organized in reverse-chronological order, detailing your previous positions. Use bullet points to effectively highlight your key achievements and contributions in each role.

We’ll now look at a couple of examples that illustrate what a strong work history looks like for Informatica developers. These examples will show you what works well and what to avoid:

Informatica Developer

Tech Solutions Inc. – New York, NY

- Worked on data integration tasks

- Collaborated with team members

- Managed databases and ETL processes

- Assisted with troubleshooting issues

- Lacks specific project details and outcomes

- Bullet points do not highlight personal contributions or successes

- Describes routine duties rather than showcasing strong achievements

Informatica Developer

Tech Solutions Inc. – San Francisco, CA

March 2020 - Present

- Develop ETL processes using Informatica to extract data from various sources, improving data accuracy by 30%

- Collaborate with business analysts to design and implement data warehousing solutions, resulting in a 40% decrease in report generation time

- Lead a team of junior developers in best practices for data integration, improving project delivery timelines by 25%

- Starts each bullet with dynamic action verbs that clearly define the applicant's contributions

- Presents quantifiable achievements that showcase the impact of the job seeker's work

- Highlights relevant skills such as collaboration and leadership while detailing specific accomplishments

While your resume summary and work experience are central, don't overlook the other sections, which also deserve careful formatting. For further insights on crafting a standout resume, be sure to check out our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A well-defined skills section is important for any effective resume. It allows you to showcase your qualifications and makes it easy for employers to see if you fit the role at a glance.

As an Informatica developer, highlight both your technical skills and your analytical abilities. Include expertise in ETL processes, proficiency with Informatica PowerCenter, and familiarity with SQL databases to demonstrate that you can handle data integration tasks effectively.

Soft skills such as problem-solving and effective communication are also essential; they foster collaboration within teams and help ensure successful project outcomes.

Selecting the right resume skills is important for standing out to potential employers. Many organizations use applicant tracking systems (ATS) that filter job seekers based on these essential skills, so aligning your qualifications with employer expectations is key.

To identify the most relevant skills, take time to review job postings. They often reveal the specific abilities recruiters prioritize, helping your resume make a stronger impression and pass ATS scans more effectively.


10 skills that appear on successful Informatica developer resumes

Highlighting essential skills on your resume can help you attract the attention of recruiters looking for Informatica developers. You can see these skills in action in our resume examples, which can help you apply for positions with confidence.

Here are 10 skills that you should think about including in your resume if they align with your experience and job requirements:

- Data integration

- ETL processes

- SQL skills

- Problem-solving abilities

- Analytical thinking

- Knowledge of data warehousing

- Experience with cloud platforms

- Attention to detail

- Collaboration and teamwork

- Project management

Based on analysis of 5,000+ information technology professional resumes from 2023-2024

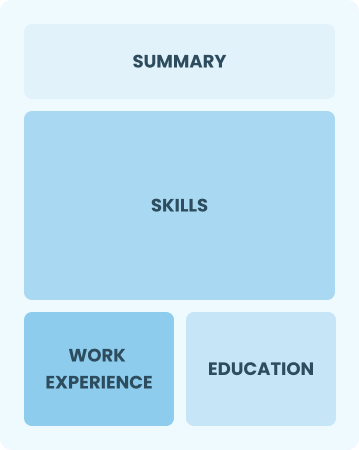

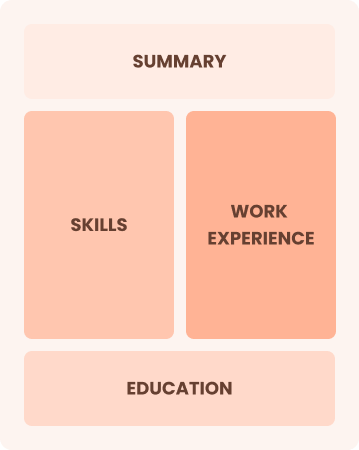

Resume Format Examples

Selecting the appropriate resume format is important for an Informatica developer because the right format presents your technical expertise, relevant projects, and career growth clearly and persuasively.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with minimal experience in data integration

Combination

Balances skills and work history equally

Best for:

Mid-career professionals eager to demonstrate their skills and pursue growth opportunities

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned experts leading complex data integration projects

Frequently Asked Questions

Should I include a cover letter with my Informatica developer resume?

Absolutely, including a cover letter is key to making a strong impression on employers. It allows you to highlight your skills and passion for the position in a personal way. If you're looking for help crafting yours, check out our helpful resources like how to write a cover letter or use our Cover Letter Generator for quick assistance.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs abroad, consider using a CV instead of a resume, as many countries favor this format. To help you craft an effective CV, we offer resources with guidance on how to write a CV, including formatting and content tailored to international standards. You can also explore our CV examples for more inspiration.

What soft skills are important for Informatica developers?

Soft skills such as communication, problem-solving, and teamwork are essential for an Informatica developer. These interpersonal skills facilitate collaboration with colleagues and stakeholders, ensuring successful project delivery and fostering a positive work environment.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills, such as analytical thinking, teamwork, and project management. Even if your previous roles were outside of IT, these abilities can demonstrate your potential value as an Informatica developer. Use concrete examples from past projects to illustrate how you’ve successfully tackled challenges and contributed to team goals in relevant ways.

How should I format a cover letter for an Informatica developer job?

To format a cover letter for an Informatica Developer position, start with your contact details followed by a professional greeting. An engaging introduction that highlights your interest in the role should come next, and then you can summarize your relevant skills and experiences. Tailor each section to reflect the job requirements, and conclude with a strong closing statement that encourages further communication.

How do I add my resume to LinkedIn?

You can add your resume to LinkedIn by uploading it to your profile or by highlighting essential skills and projects in the "About" and "Experience" sections. This makes it easier for recruiters to find qualified Informatica developers like you, improving your chances of landing job opportunities.