Hard skills like programming in Ab Initio, data integration techniques, and performance tuning are essential for an Ab Initio developer to build efficient data pipelines.

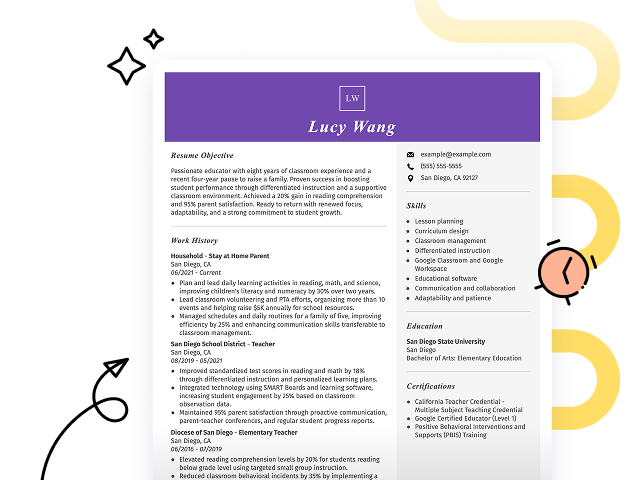

Popular Ab Initio Developer Resume Examples

Check out our top Ab Initio developer resume examples that emphasize critical skills such as data integration, ETL processes, and software development. These examples will help you showcase your experience effectively to potential employers.

Looking to build your own impressive resume? Our Resume Builder offers user-friendly templates specifically designed for tech professionals, making it simple to create a standout application.


Entry-level Ab Initio developer resume

This entry-level resume for an Ab Initio developer effectively highlights the applicant's technical skills and achievements, showcasing their ability to optimize ETL processes and develop reusable components. New professionals in this field should demonstrate not only technical competence but also the problem-solving ability and collaborative experience that add value for employers.

Mid-career Ab Initio developer resume

This resume showcases the applicant's robust skill set and accomplishments in data integration, clearly illustrating their readiness for advanced roles. The emphasis on leadership in projects and quantifiable results highlights a trajectory of growth and capability to tackle complex challenges in the field.

Experienced Ab Initio developer resume

The work history highlights the applicant's expertise in optimizing ETL workflows, including a 25% improvement in job performance. The clear bullet format effectively showcases key achievements such as reducing project timelines by 10%, making it ideal for tech roles that require precision.

Resume Template: Easy to Copy & Paste

David Liu

Chicago, IL 60607

(555) 555-5555

David.Liu@example.com

Skills

- ETL Development

- Ab Initio Graph Design

- Data Integration

- Performance Optimization

- Data Validation

- SQL Query Writing

- Big Data Frameworks

- Cloud Data Migration

Languages

- Spanish - Beginner (A1)

- French - Intermediate (B1)

- German - Beginner (A1)

Professional Summary

Highly skilled Ab Initio Developer with 6+ years of expertise in ETL development, data integration, and performance optimization. Strong background in creating scalable, efficient workflows with measurable results. Proficient in Ab Initio, SQL, and cloud data migration.

Work History

Ab Initio Developer

TechWave Solutions - Chicago, IL

January 2023 - December 2025

- Optimized ETL workflows, improving job performance by 25%.

- Developed 10+ complex data processing graphs in Ab Initio.

- Collaborated with teams to reduce data processing errors by 30%.

ETL Consultant

DataInspire Tech - Chicago, IL

July 2019 - December 2022

- Streamlined the ETL pipeline, reducing data load time by 15%.

- Migrated data from legacy systems to cloud-based platforms.

- Improved data accuracy by implementing validation protocols.

Data Integration Analyst

Fusion Data Systems - Springfield, IL

July 2017 - June 2019

- Designed data integration solutions with 99.9% uptime.

- Collaborated with engineers to scale ETL processes by 20%.

- Monitored and resolved daily production job errors efficiently.

Certifications

- Ab Initio Certified Developer - Ab Initio Corporation

- Certified Data Integration Specialist - DataCert Academy

- Advanced ETL Programming Certification - ETL Academy

Education

Master of Science, Information Systems

University of California, Berkeley - Berkeley, California

May 2017

Bachelor of Science, Computer Science

University of Texas at Austin - Austin, Texas

May 2015

How to Write an Ab Initio Developer Resume Summary

Your resume summary is the first thing employers will see, so it needs to be a compelling introduction that captures their attention. As an Ab Initio developer, highlight the programming skills and problem-solving abilities that align with the role's demands. The following examples show a common pitfall and an effective approach:

I am an Ab Initio developer with experience in various projects. I am looking for a position that allows me to use my skills effectively and grow professionally. A company that supports teamwork and innovation would be perfect for me. I believe I can contribute positively if given the chance.

- Contains vague phrases about experience without specific achievements or technologies

- Overuses personal pronouns, which detracts from professionalism

- Emphasizes what the applicant seeks rather than highlighting their unique contributions to potential employers

Detail-oriented Ab Initio developer with 4+ years of experience in data integration and application development. Successfully increased data processing efficiency by 30% through optimized coding practices and improved ETL processes. Proficient in SQL, Unix scripting, and using Ab Initio for complex data transformations to support business intelligence initiatives.

- Clearly states the job seeker's experience level and specific area of expertise

- Highlights a quantifiable achievement that showcases significant impact on operational efficiency

- Mentions relevant technical skills that align with job requirements for an Ab Initio developer


Showcasing Your Work Experience

The work experience section is one of the most important parts of an Ab Initio developer's resume, since it contains the bulk of your content. Good resume templates give this section prominence so your professional history stands out.

This section should be organized in reverse-chronological order, clearly listing your previous roles. Use bullet points to highlight specific achievements and contributions made in each position.

The following work history entries for Ab Initio developers illustrate a common pitfall and best practices:

Ab Initio Developer

Tech Solutions Inc. – San Francisco, CA

- Developed software applications.

- Participated in meetings.

- Collaborated with team members.

- Tested code and fixed bugs.

- Lacks specific details about projects worked on

- Bullet points describe basic responsibilities without emphasizing achievements

- Does not highlight any measurable impact or results from the work done

Ab Initio Developer

Tech Solutions Inc. – New York, NY

March 2020 - Present

- Develop and optimize ETL processes using Ab Initio, improving data processing time by 30%.

- Collaborate with data architects to design robust data integration solutions, improving overall system performance and reliability.

- Mentor junior developers on best practices in Ab Initio, fostering a culture of continuous learning and skill development.

- Starts each bullet with strong action verbs that showcase the applicant's contributions

- Incorporates quantifiable results to highlight effectiveness and impact

- Demonstrates relevant technical skills while emphasizing teamwork and mentorship

While your resume summary and work experience carry the most weight, don’t overlook the other sections that round out your application. For more on writing each part of your resume, see our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A well-crafted skills section is vital to an effective resume: it highlights your qualifications at a glance, makes a strong first impression, and helps employers quickly identify suitable applicants.

For hiring managers, this section serves as a quick reference for checking whether applicants meet the essential criteria. It lets job seekers, including Ab Initio developers, align their capabilities directly with job requirements by presenting both technical and interpersonal skills.

Soft skills for an Ab Initio developer include teamwork, problem-solving, and effective communication because these abilities foster collaboration and improve project outcomes in technical environments.

When selecting your resume skills, align them with what employers expect. Many organizations use applicant tracking systems (ATS) to automatically filter out applicants who lack the essential skills for the position.

To improve your chances, review job postings carefully to see which skills recruiters and ATS software value most. This approach will help you emphasize the right abilities and stand out in a competitive job market.


10 skills that appear on successful Ab Initio developer resumes

Highlighting essential skills on your resume can significantly increase your chances of catching a recruiter's eye. Our resume examples show how Ab Initio developers can present these high-demand skills effectively, making it easier to land interviews.

Consider incorporating skills from the following list that match your experience and the job requirements:

- Proficiency in Ab Initio

- Data modeling expertise

- ETL process knowledge

- Performance tuning abilities

- Strong analytical skills

- Problem-solving capabilities

- Attention to detail

- Effective communication skills

- Team collaboration experience

- Ability to work under tight deadlines

Based on an analysis of 5,000+ web development professional resumes from 2023-2024

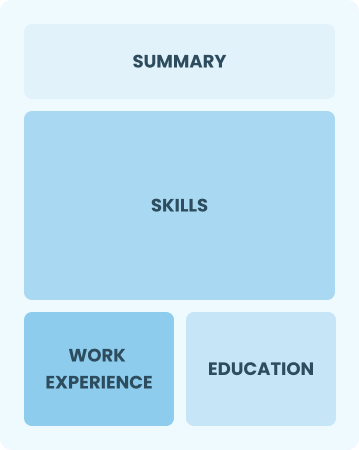

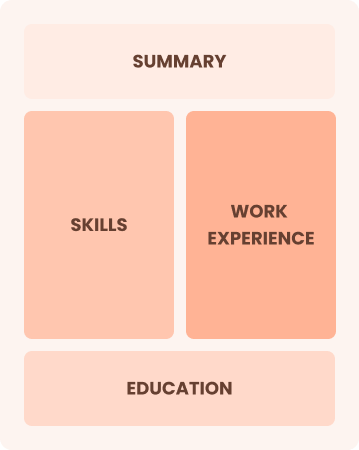

Resume Format Examples

Choosing the right resume format matters for an Ab Initio developer because it determines how clearly your technical expertise, project experience, and career progression come across to potential employers.

Functional

Focuses on skills rather than previous jobs. Best for: recent graduates and career changers with up to two years of experience.

Combination

Balances skills and work history equally. Best for: mid-career developers who want to showcase their skills and are seeking growth opportunities.

Chronological

Emphasizes work history in reverse-chronological order. Best for: seasoned developers leading complex Ab Initio projects.

Frequently Asked Questions

Should I include a cover letter with my Ab Initio developer resume?

Yes. A cover letter can strengthen your application by conveying your enthusiasm and giving deeper insight into your qualifications. It also lets you connect with potential employers on a more personal level. For tips on writing a compelling cover letter, see our guide on how to write a cover letter or use our Cover Letter Generator for quick assistance.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs abroad, a CV is often more appropriate than a resume. A CV provides a comprehensive overview of your academic background and professional experience. For guidance on how to write a CV effectively, explore our resources that offer templates and tips tailored for international applications. Additionally, reviewing CV examples can provide valuable insight into structuring your document.

What soft skills are important for Ab Initio developers?

Soft skills such as problem-solving, collaboration, and adaptability are important for an Ab Initio developer. These interpersonal skills foster effective teamwork and help you navigate challenges in projects, ensuring smooth communication with colleagues and stakeholders while improving project outcomes.

I’m transitioning from another field. How should I highlight my experience?

When applying for Ab Initio developer roles, highlight transferable skills like analytical thinking, teamwork, and project management. These abilities demonstrate your adaptability and potential to succeed in the tech field, even if you have limited experience. Use specific examples from your past work to show how these skills would support software development and problem-solving.

Where can I find inspiration for writing my cover letter as an Ab Initio developer?

For aspiring Ab Initio developers, exploring professionally crafted cover letter examples can be invaluable. These samples provide great inspiration for content ideas, formatting tips, and effective ways to showcase your skills and qualifications to potential employers.

Should I include a personal mission statement on my Ab Initio developer resume?

Yes, including a personal mission statement on your resume is recommended. It effectively conveys your core values and career aspirations. This approach is especially compelling when applying to organizations that prioritize cultural fit or have a strong commitment to their mission and vision.