Hard skills are technical, measurable abilities, such as database management, network configuration, and data security protocols, that are essential for maintaining efficient data systems.

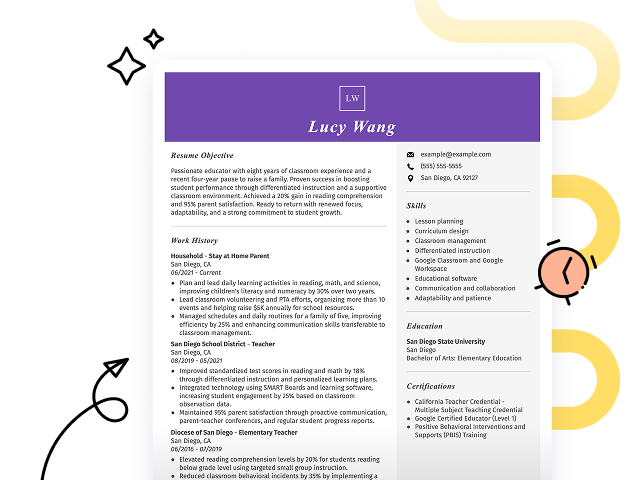

Popular Data Systems Administration Resume Examples

Check out our top data systems administration resume examples that emphasize important skills like database management, system optimization, and technical troubleshooting. These examples demonstrate how to effectively highlight your accomplishments in a competitive field.

Looking to build your ideal resume? Our Resume Builder offers user-friendly templates specifically designed for data professionals, helping you showcase your expertise with ease.


Entry-level data systems administration resume

This entry-level resume effectively highlights the job seeker's practical experience in data systems administration, showcasing significant achievements such as optimizing database performance and implementing security measures. New professionals in this field must demonstrate their technical skills and relevant certifications to employers, illustrating their readiness for the demands of a data systems administration role despite limited work history.

Mid-career data systems administration resume

This resume effectively showcases qualifications through quantified achievements and relevant experience. The clear progression from systems support to data systems administration reflects a strong capability for leadership and tackling complex challenges in technology.

Experienced data systems administration resume

The work history section demonstrates the applicant's extensive experience in data systems administration, highlighting their ability to manage databases for over 300 clients and achieve a 20% reduction in downtime. The clear formatting allows hiring managers to quickly assess key accomplishments and skills relevant to potential roles.

Resume Template—Easy to Copy & Paste

Jane Huang

Eastside, WA 98001

(555)555-5555

Jane.Huang@example.com

Skills

- System Administration

- Database Management

- Cloud Computing

- Network Security

- Data Analysis

- Performance Optimization

- IT Support Services

- Automation

Languages

- Spanish - Beginner (A1)

- German - Beginner (A1)

- French - Intermediate (B1)

Professional Summary

Seasoned Data Systems Administrator with expertise in optimizing system performance and data integrity. Proficient in cloud computing and network security, enhancing operational efficiency with quantifiable success.

Work History

Data Systems Administrator

Innovate Analytics Inc. - Eastside, WA

January 2024 - October 2025

- Enhanced system uptime by 30%

- Managed data integrity for 50+ assets

- Coordinated IT support, reducing downtime

IT System Specialist

TechWave Solutions - Tacoma, WA

February 2022 - January 2024

- Optimized network speed by 20%

- Reduced server costs by $10K annually

- Automated backup processes

Database Administrator

Data Stream Insights - Tacoma, WA

January 2020 - January 2022

- Increased query efficiency by 25%

- Managed 100+ database systems

- Implemented security protocols

Certifications

- Certified System Administrator - TechCert Institute

- Data Protection Officer Training - DataSec Academy

Education

Master's Information Technology

University of Washington - Seattle, WA

June 2019

Bachelor's Computer Science

Seattle Tech College - Seattle, WA

June 2018

How to Write a Data Systems Administration Resume Summary

Your resume summary is the first thing employers will notice, making it essential to create a strong impression that showcases your qualifications. As a data systems administrator, you should emphasize your technical skills, problem-solving abilities, and experience with system management.

Highlight your skill in maintaining data integrity and ensuring system functionality. This role demands attention to detail and a commitment to optimizing processes for efficiency.

To guide you in crafting an effective summary, consider the following examples that illustrate what works well and what might fall short:

I am a data systems administrator with many years of experience in IT. I want to find a job where I can use my skills and help improve the company’s technology. Having a supportive workplace that promotes career advancement is very important to me. I believe I can add value to your team if given the chance.

- Lacks specific details about technical skills or achievements relevant to data systems administration

- Overuses personal pronouns and vague language, making it sound generic rather than effective

- Emphasizes personal desires for the role instead of highlighting what unique contributions the applicant can make to the organization

Results-driven data systems administrator with over 7 years of experience managing enterprise-level databases and optimizing data workflows. Achieved a 30% increase in system efficiency by implementing automated processes and improving database performance monitoring. Proficient in SQL, Oracle, and cloud-based solutions, alongside strong problem-solving skills to ensure data integrity and security.

- Begins with specific years of experience and focus on key responsibilities

- Highlights quantifiable achievements that demonstrate impact on operational efficiency

- Mentions relevant technical skills that align with industry requirements for data systems administration


Showcasing Your Work Experience

The work experience section is the core of a data systems administrator's resume. It holds the bulk of your content, and effective resume templates give it the most space.

In this area, list your previous positions in reverse-chronological order. Use bullet points to detail your key achievements and contributions in each role, making it easy for hiring managers to spot relevant experience.

To help you refine your approach, we’ve included two example work history entries, one weak and one strong. Together they show which pitfalls to avoid and what works well:

Data Systems Administrator

Tech Solutions Inc. – San Francisco, CA

- Managed data systems and servers

- Performed routine maintenance tasks

- Worked with IT team members on projects

- Assisted users with technical issues

- Lacks specific achievements or projects completed during employment

- Bullet points are vague and do not highlight unique skills or contributions

- No mention of any measurable results or improvements made

Data Systems Administrator

Tech Solutions Inc. – Austin, TX

March 2020 - Current

- Manage and optimize data storage solutions for over 500 users, improving access speed by 30%

- Design and implement backup protocols that reduce data loss incidents by 40% year-over-year

- Collaborate with IT teams to improve system security measures, achieving a 50% decrease in unauthorized access attempts

- Starts each bullet point with powerful action verbs that clearly showcase the applicant’s contributions

- Incorporates specific metrics to quantify achievements, making accomplishments concrete and relatable

- Highlights critical skills relevant to the role, demonstrating the job seeker’s value in a technical environment

Your resume summary and work experience are important, but don’t overlook the other sections. Each part plays a role in showcasing your qualifications. For detailed guidance on crafting an effective resume, review our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

A well-defined skills section is important for any strong resume, allowing you to showcase your abilities clearly and efficiently. This section helps employers quickly identify if you possess the essential qualifications they seek for the role.

Be sure to include a mix of hard and soft skills to make your resume stronger. Highlight technical abilities with SQL databases, cloud platforms such as AWS or Azure, and software like Docker or Kubernetes, along with problem-solving and organizational skills that support efficient and reliable system administration.

Soft skills are interpersonal qualities such as problem-solving, communication, and teamwork that foster collaboration and ensure the effective implementation of technology across the organization.

Selecting the right resume skills shows that you match what employers expect from job seekers. Many companies use applicant tracking systems (ATS) that filter out resumes lacking essential abilities, so your most relevant skills need to appear on the page.

To get noticed, carefully analyze job postings for the specific skills employers list. This approach helps you prioritize the competencies that recruiters and ATS software weigh most heavily, increasing your chances of landing an interview.
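One rough way to compare a job posting against your skills list is a simple keyword check. The sketch below is only an illustration: the function name `match_skills`, the sample posting, and the skills list are all hypothetical, and real ATS software may score resumes very differently.

```python
import re


def match_skills(job_posting: str, resume_skills: list[str]) -> dict[str, list[str]]:
    """Split resume skills into those the posting mentions and those it does not."""
    text = job_posting.lower()
    found, missing = [], []
    for skill in resume_skills:
        # Case-insensitive whole-phrase match, so "SQL" does not match inside "NoSQL".
        pattern = r"(?<!\w)" + re.escape(skill.lower()) + r"(?!\w)"
        if re.search(pattern, text):
            found.append(skill)
        else:
            missing.append(skill)
    return {"found": found, "missing": missing}


# Hypothetical posting text and skills list, for illustration only.
posting = (
    "Seeking an administrator with SQL, AWS, and Kubernetes experience. "
    "Strong problem-solving skills required."
)
skills = ["SQL", "AWS", "Docker", "Kubernetes", "Problem-solving"]

result = match_skills(posting, skills)
print("Mentioned in posting:", result["found"])
print("Not mentioned:", result["missing"])
```

Skills the posting mentions are good candidates to feature prominently, provided they genuinely reflect your experience. A check like this only catches exact phrases, so read the posting yourself for synonyms and related terms.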


10 skills that appear on successful data systems administration resumes

Adding high-demand data systems administration skills to your resume can significantly improve your chances of catching a recruiter's eye. These competencies demonstrate not only your qualifications but also your alignment with industry needs. You can see these skills in action in our resume examples.

Here are 10 essential skills to consider incorporating into your resume if they align with your experience and job specifications:

Database management

Data analysis

Problem-solving

Attention to detail

Technical proficiency

Network configuration

Backup and recovery solutions

Cloud computing knowledge

Scripting language proficiency

User support and training

Based on analysis of 5,000+ data systems administration professional resumes from 2023-2024

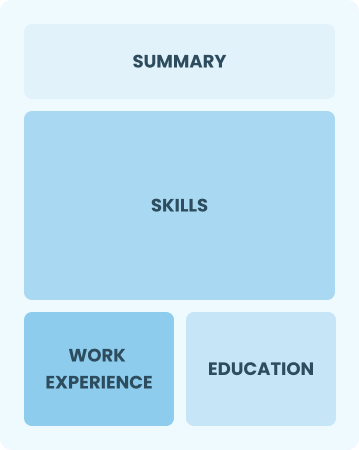

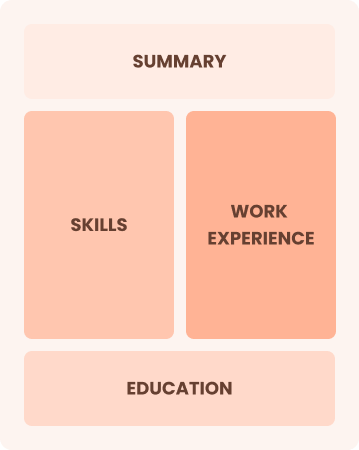

Resume Format Examples

The right resume format presents your data systems administration expertise clearly, emphasizing your technical skills, relevant experience, and career growth.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with 0-2 years of experience

Combination

Balances skills and work history equally

Best for:

Mid-career professionals focused on advancing their skills and career trajectory

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned experts driving innovation in data management systems

Frequently Asked Questions

Should I include a cover letter with my data systems administration resume?

Absolutely, including a cover letter can significantly improve your application. It allows you to showcase your personality and clarify how your skills align with the job. If you're not sure how to write a cover letter, take a look at our easy-to-follow guide. For quick assistance, you can also use our Cover Letter Generator. Don’t miss out on this opportunity to make a strong impression!

Can I use a resume if I’m applying internationally, or do I need a CV?

In many countries outside the U.S., employers expect a CV rather than a resume, so check the norms for the country where you are applying. To assist you, we offer comprehensive resources on how to write and format a CV that aligns with international standards. You can also explore our CV examples to guide you in crafting an effective document.

What soft skills are important for data systems administrators?

Soft skills such as communication, problem-solving, and teamwork are essential in data systems administration. These interpersonal skills foster collaboration with colleagues and clients, ensuring that systems run smoothly and issues are resolved efficiently.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills such as analytical thinking, communication, and project management. These abilities are important in data systems administration and show how you can add value even if your experience is limited. Use concrete examples from previous roles to illustrate your contributions and relate them to the responsibilities of the position you're applying for.

Where can I find inspiration for writing my cover letter as a data systems administrator?

For those pursuing data systems administration roles, exploring professional cover letter examples can be incredibly beneficial. These samples provide inspiration for content ideas, offer formatting guidance, and illustrate how to effectively present your qualifications and career journey in a compelling way.

How should I format a cover letter for a data systems administration job?

To effectively format a cover letter, start by including your contact information at the top. Follow this with a professional greeting and an engaging opening paragraph that captures attention. In the body, highlight your relevant experience and skills, then conclude with a strong closing statement that encourages further discussion. Always tailor your content to align closely with the specific job requirements for data systems administration roles.