Hard skills like expertise in data modeling, ETL process design, and SQL querying are essential for an ETL developer to manage data effectively.

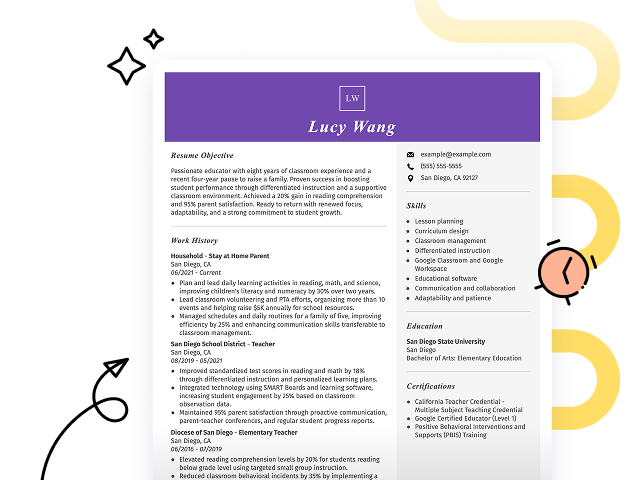

Popular ETL Developer Resume Examples

Check out our top ETL developer resume examples that emphasize key skills such as data integration, transformation processes, and database management. These examples highlight how to effectively showcase your technical expertise to potential employers.

Looking to build your own impressive resume? Our Resume Builder offers user-friendly templates specifically designed for tech professionals, helping you make a strong impression in your job applications.


Entry-level ETL developer resume

This entry-level resume for an ETL Developer effectively highlights the applicant's technical skills and accomplishments in data processing and integration, showcasing their ability to improve system efficiency and accuracy. New professionals in this field must convey practical experience through relevant projects, certifications, and educational achievements to demonstrate their readiness for the role despite limited work history.

Mid-career ETL developer resume

This resume effectively showcases the job seeker’s extensive experience and technical skills in ETL development, demonstrating their capability for advanced responsibilities. The focus on automation and optimization highlights their readiness to lead complex projects and drive significant cost savings.

Experienced ETL developer resume

The work history section highlights the applicant's extensive experience as an ETL Developer, showcasing a strong ability to optimize processes and improve data accuracy. Notably, they reduced runtime by 30% and improved data accuracy by 25%. Clear formatting makes these results easy for hiring managers to scan.

Resume Template—Easy to Copy & Paste

Jin Martinez

Greenfield, IN 46145

(555)555-5555

Jin.Martinez@example.com

Professional Summary

ETL Developer with 9 years in data integration, improving processes, and boosting efficiency through automation. Expertise in SQL, Python, and ETL tools.

Work History

ETL Developer

DataSync Systems - Greenfield, IN

June 2023 - December 2025

- Improved data processing by 30% with ETL tools

- Reduced system downtime by 50% through automation

- Enhanced data accuracy, reducing errors by 25%

Data Integration Specialist

InfoTech Solutions - Greenfield, IN

May 2018 - May 2023

- Integrated data sources for 25+ projects annually

- Streamlined processes, boosting efficiency by 40%

- Designed ETL workflows, increasing throughput by 20%

Business Intelligence Analyst

Innovate Analytics - Indianapolis, IN

January 2016 - April 2018

- Analyzed trends, enhancing BI insights by 30%

- Managed data sets, standardizing formats efficiently

- Reduced data retrieval time by 15% with optimization

Skills

- ETL development

- Data integration

- SQL

- Data warehousing

- Python programming

- Automation

- Business intelligence

- Data visualization

Certifications

- Certified ETL Developer - Data Certification Institute

- Business Intelligence Professional - BI Professionals Society

Education

Master's Degree Computer Science

Tech University Boston, Massachusetts

May 2015

Bachelor's Degree Information Technology

State University Boston, Massachusetts

May 2013

Languages

- Spanish - Beginner (A1)

- French - Beginner (A1)

- Mandarin - Beginner (A1)

How to Write an ETL Developer Resume Summary

Your resume summary is your first opportunity to grab an employer's attention, so it needs a compelling introduction. As an ETL developer, you should highlight your technical skills in data integration and your experience with ETL tools and methodologies. The two example summaries below illustrate what works well and which pitfalls to avoid:

Weak example

I am an experienced ETL developer with a solid background in data integration and transformation. I am seeking a job that allows me to use my skills and grow within the company. A position that offers stability and chances for personal development would be perfect for me. I believe I can contribute positively if given the chance.

Why it falls short:

- Lacks specific details about technical skills or tools used, making it vague

- Focuses on personal desires rather than showcasing what the applicant brings to potential employers

- Uses generic phrases that do not highlight any unique strengths or achievements

Strong example

Results-driven ETL Developer with over 6 years of experience in data integration and transformation for enterprise-level applications. Improved data processing efficiency by 30% through the implementation of optimized ETL workflows and real-time data validation techniques. Proficient in SQL, Python, and Informatica, with a strong focus on ensuring data accuracy and compliance with industry standards.

Why it works:

- Starts with a clear experience level and highlights specific skills related to ETL development

- Showcases a quantifiable achievement that illustrates a significant impact on performance metrics

- Mentions relevant technical tools and technologies that are critical for the role, demonstrating job readiness


Showcasing Your Work Experience

The work experience section carries the bulk of your resume's content as an ETL developer. Good resume templates always include this section so you can showcase your professional journey.

This part should be organized in reverse-chronological order, highlighting your previous roles and responsibilities. Use bullet points to detail specific achievements and contributions that demonstrate your skills in data integration and transformation.

To further assist you, we’ve prepared a couple of examples that illustrate effective entries for ETL developers. These examples will clarify what works well and what should be avoided:

Weak example

ETL Developer

Tech Solutions Inc. – Austin, TX

- Managed data extraction from sources.

- Performed basic transformations on data.

- Collaborated with team members on projects.

- Assisted in loading data into systems.

Why it falls short:

- Lacks any mention of specific tools or technologies used

- Bullet points focus on generic tasks without showcasing skills or achievements

- No measurable results or impact of the work is highlighted

Strong example

ETL Developer

Data Solutions Inc. – Austin, TX

March 2020 - Present

- Design and implement ETL processes that have improved data processing efficiency by 30%, enabling timely access to critical business insights.

- Collaborate with stakeholders to gather requirements and deliver solutions that have improved data accuracy, reducing errors by 25%.

- Train junior developers on best practices in ETL development, fostering a culture of continuous improvement and skill enhancement.

Why it works:

- Starts each bullet point with dynamic action verbs that clearly outline the applicant's contributions

- Incorporates specific metrics demonstrating improvements achieved through the job seeker's efforts

- Highlights relevant skills such as collaboration and mentorship that are essential for an ETL Developer

While your resume summary and work experience are important elements, don't overlook the importance of other sections. Each part plays a role in showcasing your qualifications. For more in-depth guidance on crafting your resume effectively, check out our detailed guide on how to write a resume.

Top Skills to Include on Your Resume

A skills section is a vital component of any resume, providing a quick overview of your qualifications. It improves the clarity of your professional profile, making it easier for employers to identify your capabilities at a glance.

For hiring managers, this section allows for rapid evaluation of applicant suitability based on essential job criteria. ETL developers should highlight both technical and interpersonal skills that reflect their expertise and adaptability, which we will discuss in detail below.

Soft skills are essential for ETL developers, as effective communication, teamwork, and problem-solving abilities foster collaboration and ensure smooth data integration processes.

When crafting your resume skills, it's important to align them with what employers expect. Many organizations use automated systems that filter out job seekers who lack essential qualifications for the position.

To improve your chances of getting noticed, review job postings closely. They often name the specific skills that recruiters and applicant tracking systems (ATS) look for, which lets you tailor your resume to match.


10 skills that appear on successful ETL developer resumes

Make your resume stand out to recruiters by highlighting skills that are in high demand for ETL developer positions. Our resume examples show how to present these skills effectively.

Here are 10 skills you should consider incorporating into your resume if they align with your experience and job specifications:

Data integration

SQL proficiency

ETL tools expertise (e.g., Talend, Informatica)

Data warehousing concepts

Problem-solving abilities

Attention to detail

Programming knowledge (e.g., Python, Java)

Version control systems (e.g., Git)

Collaboration and teamwork

Analytical thinking

Based on analysis of 5,000+ web development professional resumes from 2023-2024

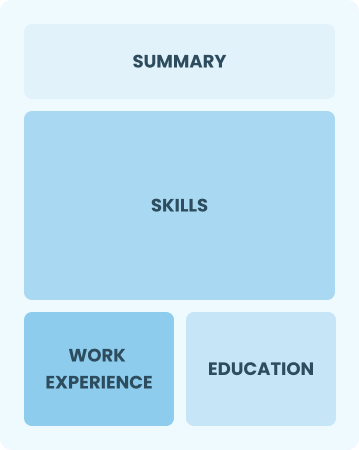

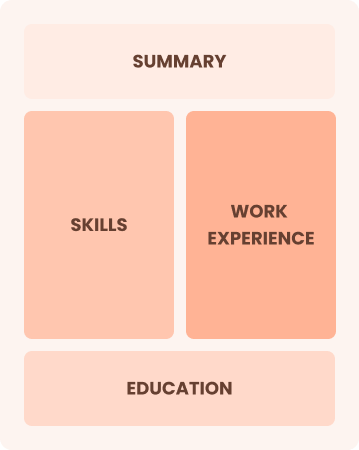

Resume Format Examples

Selecting the appropriate resume format is important for an ETL developer, because the format determines how prominently your technical skills, project experience, and career growth appear.

Functional

Focuses on skills rather than previous jobs

Best for:

Recent graduates and career changers with up to two years of experience

Combination

Balances skills and work history equally

Best for:

Mid-career professionals looking to leverage their skills and seek growth opportunities

Chronological

Emphasizes work history in reverse order

Best for:

Seasoned ETL developers leading complex data integration projects

Frequently Asked Questions

Should I include a cover letter with my ETL developer resume?

Absolutely, including a cover letter can significantly improve your application by allowing you to showcase your unique qualifications and enthusiasm for the position. It’s an excellent opportunity to connect with hiring managers on a personal level. If you need assistance, check out our resources on how to write a cover letter or use our Cover Letter Generator for quick results.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs internationally, a CV is often preferred over a resume because it is more detailed. To ensure your CV meets the standards of your target country, explore our guides on how to write a CV and our CV examples.

What soft skills are important for ETL developers?

For an ETL developer, soft skills like problem-solving, attention to detail, and communication are important. These skills improve collaboration with data teams and streamline processes, ensuring efficient data integration and quality outcomes. Strong interpersonal skills foster a productive work environment and lead to better project success.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills, such as analytical thinking, teamwork, and project management, when applying for ETL developer roles. These abilities showcase your potential to thrive in data environments, even if you lack direct experience. Share specific examples from previous jobs that illustrate how you've successfully tackled challenges or improved processes relevant to data transformation and integration.

How should I format a cover letter for an ETL developer job?

To format a cover letter for ETL developer positions, begin with your contact details and a professional greeting. Follow this with a compelling opening paragraph that captures the reader's attention. Include a summary of relevant skills and experiences that align with the job description. Always customize your content to reflect the specific requirements of the position, and conclude with a strong call to action.

Should I include a personal mission statement on my ETL developer resume?

Yes, including a personal mission statement in your resume is advisable. It effectively showcases your values and career ambitions, which is particularly appealing to companies that prioritize culture and shared goals. This approach resonates well with organizations aiming for alignment in their team’s vision.