Hard skills are the specific technical abilities PL/SQL developers need to succeed, such as writing complex queries, optimizing databases, and modeling data.

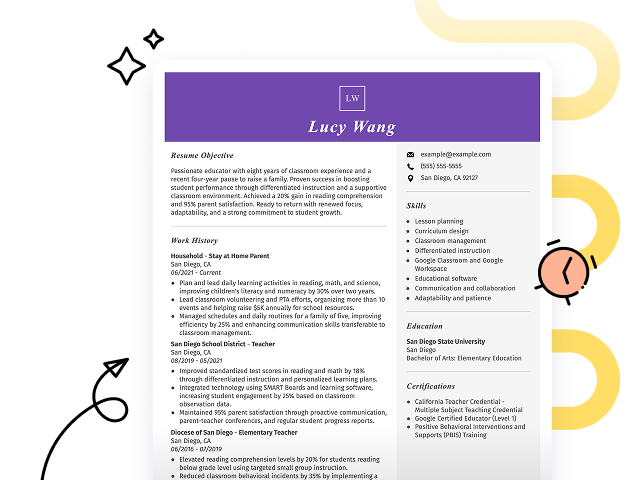

Popular PL/SQL Developer Resume Examples

Check out our top PL/SQL developer resume examples that emphasize critical skills such as database management, code optimization, and problem-solving abilities. These examples provide insight into effectively showcasing your technical achievements to potential employers.

Ready to build an impressive resume? Use our Resume Builder with user-friendly templates designed specifically for technology professionals, helping you create a standout application with ease.


Entry-level PL/SQL developer resume

This entry-level resume for a PL/SQL developer effectively highlights the applicant's technical skills and notable accomplishments in database optimization and development. New professionals must showcase their ability to improve system performance and demonstrate relevant certifications to attract employer interest, even with limited direct work experience.

Mid-career PL/SQL developer resume

This resume effectively showcases the job seeker's extensive experience in database management, emphasizing accomplishments that reflect readiness for advanced roles. By highlighting efficiency improvements and mentoring junior developers, it positions the applicant as a proactive leader capable of tackling complex challenges.

Experienced PL/SQL developer resume

The work history section highlights the applicant’s extensive experience as a PL/SQL developer and database analyst, showcasing impressive achievements such as increasing query performance by 30% and managing over 200 databases securely. The clear bullet-point format improves readability, making it easy for potential employers to quickly assess relevant accomplishments.

Resume Template: Easy to Copy & Paste

Yuki Kim

Louisville, KY 40211

(555) 555-5555

Yuki.Kim@example.com

Skills

- PL/SQL Programming

- Database Performance Optimization

- ETL Data Processes

- Stored Procedure Development

- Query Troubleshooting

- Data Migration Strategy

- SQL Query Debugging

- Database Security Enhancement

Certifications

- Oracle PL/SQL Developer Certified Associate - Oracle University

- Advanced SQL Programming Certification - DataCamp

- Database Design and Implementation Specialist - Microsoft

Languages

- Spanish - Beginner (A1)

- German - Beginner (A1)

- French - Beginner (A1)

Professional Summary

Driven PL/SQL Developer with 8 years of experience in database optimization, programming, and data migration. Proven track record of improving system performance by up to 40%. Skilled in ETL workflows, query debugging, and advanced SQL practices.

Work History

PL/SQL Developer

TechInsight Solutions - Louisville, KY

January 2023 - December 2025

- Optimized database queries, increasing performance by 30%.

- Developed PL/SQL scripts for data migration of 2M+ records.

- Implemented stored procedures, reducing processing time by 15%.

Database Analyst

DataBridge Systems - Louisville, KY

June 2019 - December 2022

- Automated report generation, saving 10 hours weekly.

- Designed ETL workflows handling 500 GB of data daily.

- Enhanced data integrity by integrating validation scripts.

SQL Programmer

NextGen Data Solutions - Crestwood, KY

January 2017 - May 2019

- Created database triggers improving system security by 25%.

- Resolved data inconsistencies affecting 3+ key applications.

- Developed complex queries improving analytics accuracy.

Education

Master's in Computer Science: Data Management and Analysis

University of Texas - Austin, Texas

May 2016

Bachelor's in Information Technology: Database Systems

University of Washington - Seattle, Washington

May 2014

How to Write a PL/SQL Developer Resume Summary

Your resume summary is your first opportunity to catch an employer's attention, so it sets the tone for your entire application. As a PL/SQL developer, highlight your technical skills and your experience with database management and programming languages.

This section should emphasize your skill in writing efficient queries, optimizing performance, and solving complex problems. Showcasing these abilities will demonstrate your value to potential employers.

To illustrate what makes a strong resume summary, let's compare a weak example with a strong one for a PL/SQL developer:

I am a PL/SQL developer with several years of experience. I want to find a job where I can use my skills and be part of a good team. I hope to work at a company that values its employees and offers good benefits. I believe I can contribute positively if hired.

- Lacks specific details about the job seeker's technical skills or achievements in PL/SQL development

- Emphasizes personal desires over what value the job seeker brings to potential employers

- Uses generic language without demonstrating unique qualifications or experiences relevant to the role

Results-driven PL/SQL developer with over 6 years of experience in database design and optimization for large-scale applications. Improved query performance by 30% through the implementation of indexing strategies and optimized stored procedures. Proficient in Oracle Database, SQL tuning, and data modeling, with a strong focus on delivering efficient solutions that improve system reliability.

- Starts with specific experience level and expertise in PL/SQL development

- Highlights quantifiable achievements that showcase significant improvements in system performance

- Demonstrates relevant technical skills essential for the role, emphasizing problem-solving capabilities


Showcasing Your Work Experience

The work experience section is where you'll present most of your qualifications as a PL/SQL developer, so it deserves careful attention. A well-structured resume template gives this section prominent placement.

This area should be organized in reverse-chronological order, detailing your previous positions. Use bullet points to highlight key achievements and significant contributions in each role.

Now, let’s look at some examples that effectively illustrate how to present your work history as a PL/SQL developer. These examples will clarify what stands out and what should be avoided:

PL/SQL Developer

Tech Solutions Inc. – San Francisco, CA

- Wrote SQL queries for databases.

- Collaborated with team members on projects.

- Maintained database systems.

- Assisted in troubleshooting issues.

- Omits employment dates

- Bullet points lack specificity and measurable impacts

- Emphasizes routine tasks rather than highlighting achievements or contributions

PL/SQL Developer

Tech Innovations Inc. – San Francisco, CA

March 2020 - Present

- Develop and optimize PL/SQL code for enterprise-level applications, leading to a 30% reduction in query execution time.

- Collaborate with cross-functional teams to design database solutions that improved data integrity and reduced redundancy by 40%.

- Mentor junior developers on best practices in PL/SQL programming, improving team productivity and coding standards.

- Starts each bullet point with strong action verbs that highlight the applicant's contributions

- Incorporates specific metrics that demonstrate the effectiveness of the job seeker's work

- Showcases relevant technical skills while illustrating collaboration and mentorship

Your resume summary and work experience carry the most weight, but don't overlook the other sections; each one helps showcase your skills. For more detailed guidance on crafting an effective resume, explore our comprehensive guide on how to write a resume.

Top Skills to Include on Your Resume

Including a skills section on your resume is important because it provides a snapshot of your qualifications, making it easier for employers to gauge your fit for the role. This section serves as a direct link between your capabilities and the specific needs of the job.

Hiring managers often look at this part first to quickly evaluate whether you meet the essential criteria. For PL/SQL developers, showcasing both technical skills and interpersonal attributes can improve your appeal, as discussed below.

Soft skills are important for PL/SQL developers as they foster effective communication, teamwork, and problem-solving abilities essential for successful project outcomes and collaboration with stakeholders.

When selecting skills for your resume, align them with what employers expect from ideal applicants. Many organizations use applicant tracking systems (ATS) that filter out resumes lacking essential skills.

To ensure your resume stands out, carefully review job postings for insights into which resume skills are most valued. This practice not only helps you attract recruiters' attention but also boosts your chances of passing through ATS filters successfully.


10 skills that appear on successful PL/SQL developer resumes

To make a strong impression on recruiters, highlight the most sought-after skills for PL/SQL developer roles. These skills appear throughout our resume examples and can help you apply with confidence.

Incorporating relevant skills that align with your experience and the job requirements will strengthen your resume. Consider adding these 10 skills:

- Database design

- SQL proficiency

- Performance tuning

- Data modeling

- PL/SQL programming

- Troubleshooting and debugging

- Version control systems

- Analytical thinking

- Collaboration and teamwork

- Attention to detail

Based on an analysis of 5,000+ web development professional resumes from 2023-2024

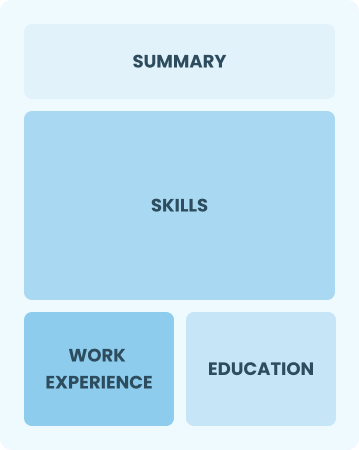

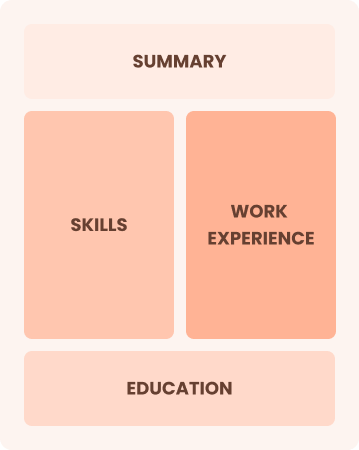

Resume Format Examples

Selecting the appropriate resume format is important for a PL/SQL developer, as it effectively showcases your technical skills, relevant experience, and career advancements in a clear and compelling way.

- Functional: Focuses on skills rather than previous jobs. Best for recent graduates and career changers with up to two years of experience.

- Combination: Balances skills and work history equally. Best for mid-career developers eager to demonstrate their technical skills and growth potential.

- Chronological: Emphasizes work history in reverse-chronological order. Best for seasoned developers leading innovative PL/SQL projects and teams.

Frequently Asked Questions

Should I include a cover letter with my PL/SQL developer resume?

Absolutely. Submitting a cover letter can make your application more impressive by showcasing your specific skills and enthusiasm for the position. For assistance in crafting an effective cover letter, explore our comprehensive guide on how to write a cover letter, or use our easy-to-use Cover Letter Generator to streamline the process.

Can I use a resume if I’m applying internationally, or do I need a CV?

When applying for jobs outside the U.S., use a CV instead of a resume. A CV provides a comprehensive overview of your career and qualifications and is expected in many countries. Check out our detailed guides on how to write a CV and explore various CV examples to help you format and create an effective CV.

What soft skills are important for PL/SQL developers?

For a PL/SQL developer, soft skills like problem-solving, communication, and adaptability are essential. These interpersonal skills foster collaboration with team members and clients, enabling effective project execution and troubleshooting. By nurturing these abilities, developers can improve teamwork and ensure successful outcomes in their projects.

I’m transitioning from another field. How should I highlight my experience?

Highlight your transferable skills such as analytical thinking, teamwork, and attention to detail when applying for PL/SQL developer positions. These abilities demonstrate your potential to excel in the role, even if your previous experience is in a different field. Use concrete examples from past jobs to show how these skills are relevant to database development and management tasks.

Should I use a cover letter template?

Yes, using a cover letter template for a PL/SQL developer position is advisable because it provides a clear structure to organize your experience with database management, optimization skills, and relevant projects, helping you effectively showcase your qualifications to hiring managers.

How do I add my resume to LinkedIn?

To improve your professional presence as a PL/SQL developer, add your resume to LinkedIn by posting it in the "Featured" section or embedding key accomplishments within your profile. This strategy helps tech recruiters and hiring managers identify skilled applicants, streamlining their search for expertise in database management.